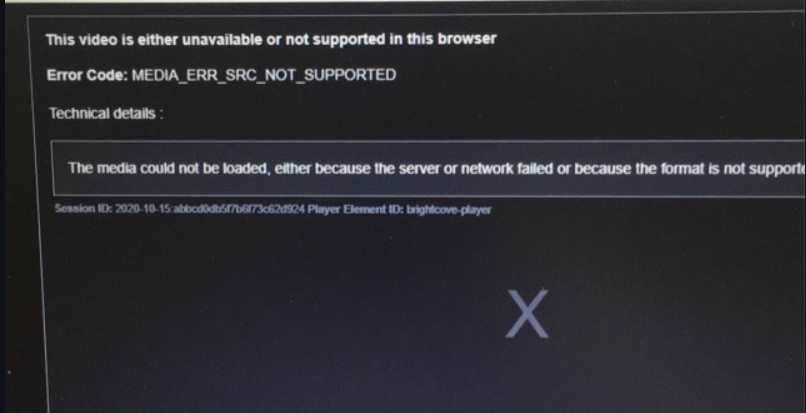

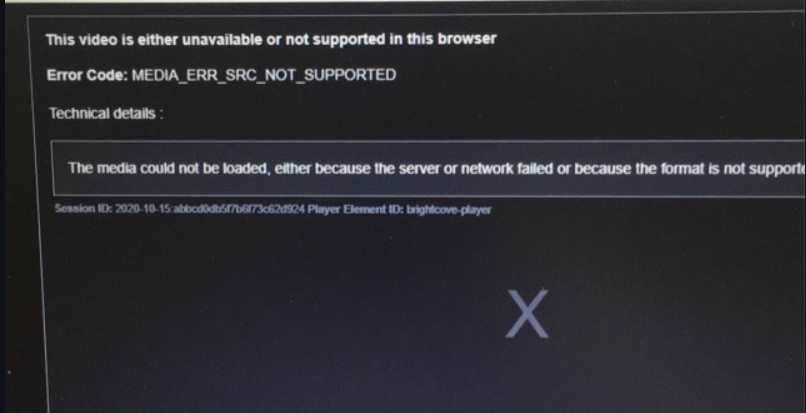

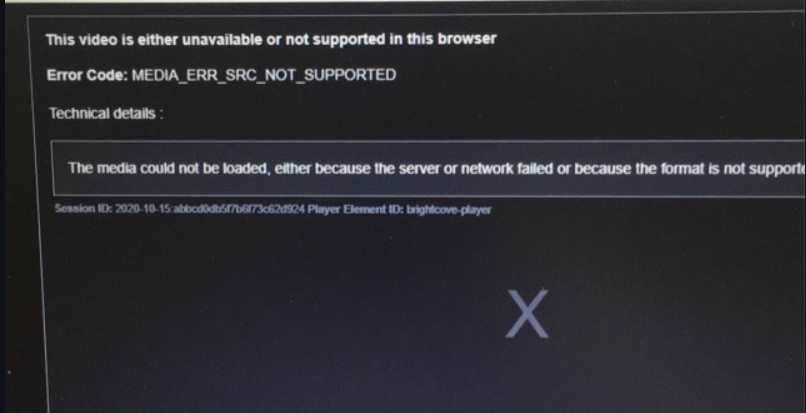

The Opera browser supports media playback, but it does not support every media format, and sometimes the source site itself does not allow playback. When you try to play such a restricted format or source in Opera, the browser reports the error MEDIA_ERR_SRC_NOT_SUPPORTED. A few settings changes can help fix this error.

Opera is one of the oldest browsers and has built a large base of loyal fans. The reason is no secret: it offers many built-in features and a smooth user interface, and it is based on the Chromium source code.

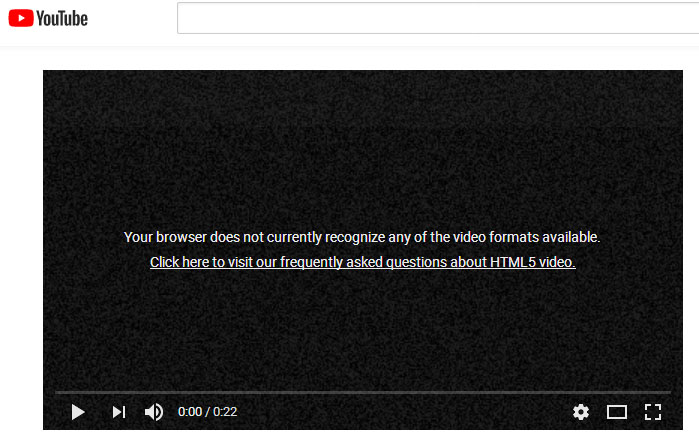

However, when it comes to handling and playing videos embedded in websites, Opera seems to give users trouble. There have been many complaints from users who could not play videos.

When they try, they are greeted by the message "This video is either unavailable or not supported in this browser." Along with it, Opera shows the error code MEDIA_ERR_SRC_NOT_SUPPORTED.
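For readers who maintain the page that hosts the video, the error code mentioned above can be read from the media element itself. The sketch below is illustrative only: `describeMediaError` is a hypothetical helper name, while the numeric codes are the ones defined by the standard `MediaError` interface, where `MEDIA_ERR_SRC_NOT_SUPPORTED` is 4.

```javascript
// Numeric codes defined by the standard MediaError interface.
const MEDIA_ERROR_NAMES = {
  1: 'MEDIA_ERR_ABORTED',
  2: 'MEDIA_ERR_NETWORK',
  3: 'MEDIA_ERR_DECODE',
  4: 'MEDIA_ERR_SRC_NOT_SUPPORTED',
};

// Hypothetical helper: turn a MediaError-like object into a readable string.
function describeMediaError(error) {
  if (!error) return 'no error';
  const name = MEDIA_ERROR_NAMES[error.code] || 'UNKNOWN';
  return error.message
    ? `${name} (${error.code}): ${error.message}`
    : `${name} (${error.code})`;
}

// In a page, it could be wired to the first <video> element.
// The guard keeps the block runnable outside a browser as well.
if (typeof document !== 'undefined') {
  const video = document.querySelector('video');
  if (video) {
    video.addEventListener('error', () => {
      console.log(describeMediaError(video.error));
    });
  }
}
```

Logging the code this way helps tell an unsupported source (4) apart from a network (2) or decoding (3) failure before trying the browser-side fixes.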


If you are facing this problem too, don't worry. This guide shares several workarounds that can help you fix the error. Let's go through them.

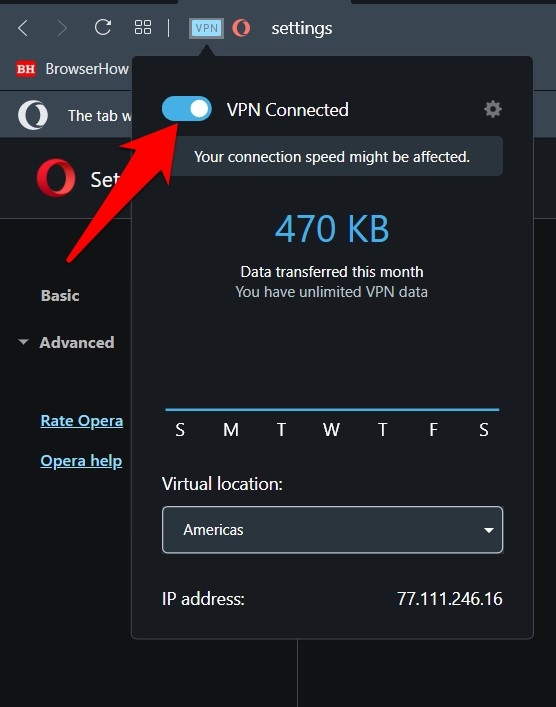

Disable Opera VPN

Opera comes with a built-in VPN that routes network traffic through a third-party server so that you can browse anonymously. In some cases, however, a website may refuse to send data to a client that is connected through a virtual private network.

So if you have enabled the browser's built-in VPN service, it is best to turn it off now.

Here are the steps to disable the VPN connection in the Opera browser:

- Launch the Opera browser on your computer.

- Click the VPN icon to the left of the address bar.

- Turn off the toggle next to VPN Connected.

Now try playing the video again and check whether the problem is gone.

A VPN has many advantages, including access to geo-restricted content, but some websites may not work properly while it is enabled. It is best to keep the VPN off while you use such sites.

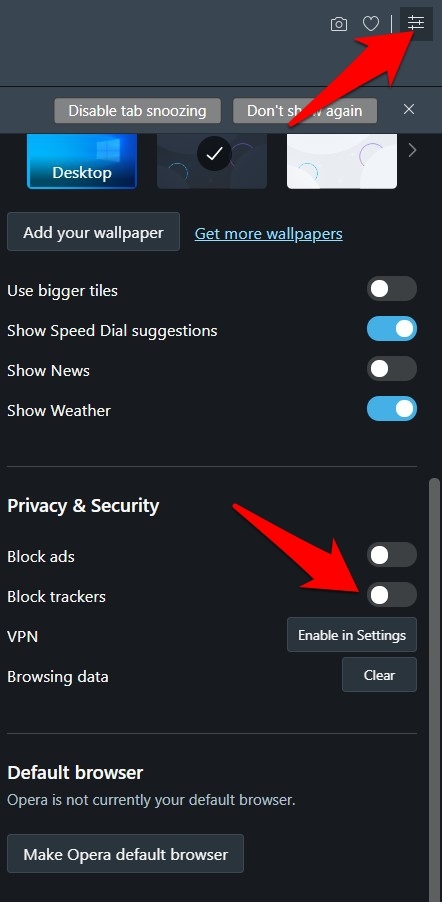

Enable trackers

Trackers follow your daily browsing activity so that sites can send you personalized content. Many users see this as a privacy risk and prefer to block them.

Blocking them can, although rarely, break some sites. You may therefore consider allowing trackers so that they can work as they do by default.

Here are the steps to allow trackers in the Opera browser:

- Launch the Opera browser on your PC.

- Click the menu located at the top right.

- Scroll to the Privacy and security section.

- Turn off the Block trackers toggle.

This turns off tracker blocking in Opera. Now reload the site that fails to play the video and check whether this fixes the MEDIA_ERR_SRC_NOT_SUPPORTED error.

Allowing trackers can mean a less private browsing environment, so you have to weigh that trade-off.

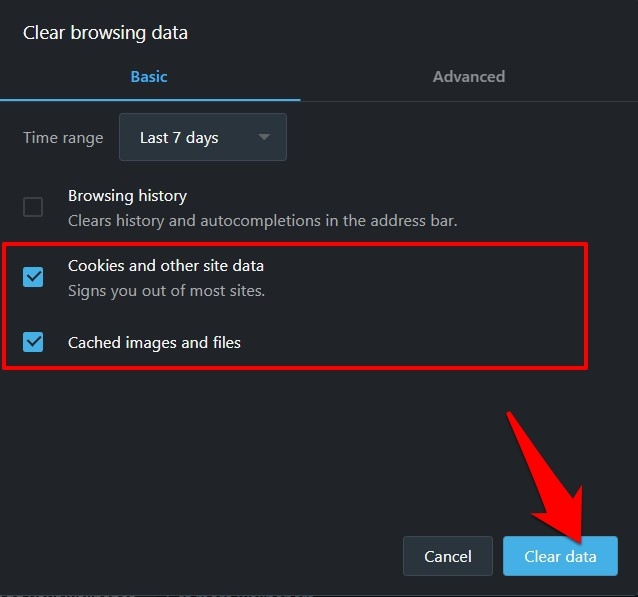

Clear cache and data

It is always a good idea to keep temporary data to a minimum. It can not only slow down the whole browser but also prevent some sites from loading correctly. It is therefore worth clearing the browser's cache and data at regular intervals.

Here are the steps to clear the cache and cookie data in the Opera browser:

- Launch the Opera browser on your computer.

- Press the Ctrl + Shift + Del keyboard shortcut.

The Clear browsing data dialog opens.

- Tick the "Cookies and other site data" and "Cached images and files" checkboxes.

- Click Clear data and wait for the process to finish.

Try playing the video again and check whether the problem is solved.

Clearing this data may sign you out of various sites, but this happens only once. As soon as you sign in again, the cookie data is repopulated.

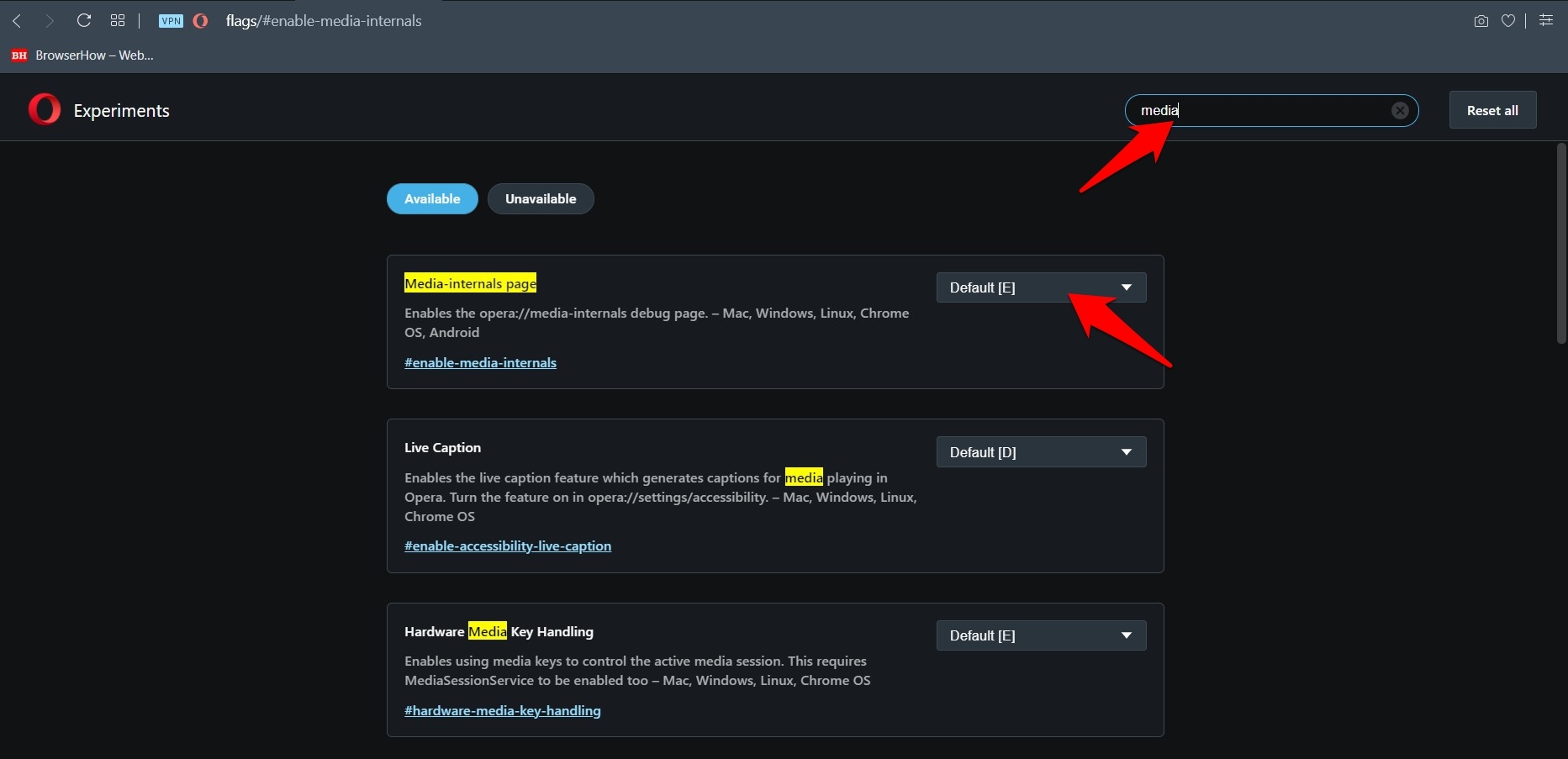

Disable media flags

Opera has a separate Flags section used for testing experimental features. Many tech enthusiasts like to try the newest features before everyone else.

Because they are experimental, however, flags can disrupt the browser's normal operation. So if you have enabled any flag in the Media category, consider returning it to its default state.

Here are the steps to reset flags in the Opera browser:

- Launch the Opera browser on your computer.

- Open the opera://flags/ page in the browser.

- Type media in the search bar.

This lists all media-related flags. Make sure every one of them is set to its default value.

- If you see any flag set to Enabled or Disabled, change it to Default.

Once that is done, restart the browser so that the changes take effect. Then try to open the media file and check whether this fixes the MEDIA_ERR_SRC_NOT_SUPPORTED error in your Opera browser.

Flags let you test new and intriguing features that may or may not make it into the stable build. They also come with caveats, so for everyday use it is better not to touch them.

Switch to another browser

Opera is notorious for not supporting certain codecs. According to an Opera forum moderator, there are some codecs that Opera cannot play, mainly because of licensing issues. As a result, it relies on the OS to play that media.

So if your OS cannot play these codecs, the browser cannot either. In that case there is only one way out: consider switching to another browser. There are quite a few Chromium and non-Chromium browsers you can try.
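Whether a given browser and OS combination can play a particular codec can be checked from a page script with the standard `canPlayType` method of media elements. The following is a minimal sketch: the helper name `probeSupport` and the list of MIME strings are illustrative assumptions, not an exhaustive or authoritative set.

```javascript
// Illustrative MIME types with codec strings; adjust to the formats you care about.
const CANDIDATE_TYPES = [
  'video/mp4; codecs="avc1.42E01E, mp4a.40.2"',
  'video/webm; codecs="vp9, opus"',
  'video/ogg; codecs="theora, vorbis"',
];

// Hypothetical helper: ask a media element which of the given types it can play.
function probeSupport(video, types = CANDIDATE_TYPES) {
  const report = {};
  for (const type of types) {
    // canPlayType returns '', 'maybe' or 'probably'; '' is reported as 'no'.
    report[type] = video.canPlayType(type) || 'no';
  }
  return report;
}

// In a browser, print the report for a fresh <video> element.
if (typeof document !== 'undefined') {
  console.table(probeSupport(document.createElement('video')));
}
```

Running the same snippet in Opera and in another browser on the same machine gives a quick indication of whether a missing codec, rather than the site, is the likely cause.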

Tweaks for older Opera builds

If for some reason you are still using an old version of Opera, we recommend updating to the latest build right away. If you do not plan to do that yet, keep the following tips in mind.

- First, make sure to disable the Encrypted Media flag on the browser's Flags page.

- Next, it is also recommended to disable the Click to Play feature.

- Likewise, consider disabling the Opera Turbo feature.

All three features have already been removed from the latest build of the browser. If you are on the latest version, you no longer need to look for them.

Bottom line: fixing the Opera media error

This concludes the guide on how to fix the MEDIA_ERR_SRC_NOT_SUPPORTED error in the Opera browser. Of the five fixes covered here, a combination of the first and the third worked for me.

Disabling the built-in VPN and clearing the browser's temporary data resolved the media codec problem, and I was able to play videos without any issues.


With that said, let us know which method fixed the MEDIA_ERR_SRC_NOT_SUPPORTED error in Opera in your case. Also tell us if you tried something else that resolved the problem.

Finally, here are recommended web browsers for your computer and mobile phone that are worth trying.

If you have any thoughts on how to fix MEDIA_ERR_SRC_NOT_SUPPORTED in Opera, feel free to leave them in the comments below. If you found this article helpful, consider sharing it with your network.


MEDIA_ELEMENT_ERROR: Format error #3722

Closed. hiren3897 opened this issue on Oct 29, 2021 · 4 comments

Have you read the Tutorials?

yes

Have you read the FAQ and checked for duplicate open issues?

yes

What version of Shaka Player are you using?

latest v3.1.2

Please ask your question

I have created a plugin that fetches our clients' URLs and returns various MP4 VOD URLs.

When I call player.load('myplugin:http//url', null, 'video/mp4'), it throws error 3016, "MEDIA_ELEMENT_ERROR: Format error".

I have rechecked that the MP4 video URLs are of type MPEG-4.

Can you please help me resolve this?

Thanks in advance.

Hello @hiren3897,

If you got a VIDEO_ERROR with code 3016, it means the video element reported an error.

error.data[0] is a MediaError code from the video element. On Edge, error.data[1] is a Microsoft extended error code in hex. On Chrome, error.data[2] is a string with details on the error.
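The layout of `error.data` described above can be unpacked with a few lines of code. This is a minimal sketch, assuming only what the comment states about the three array positions; `summarizeVideoError` is a hypothetical helper name, and the input is any object shaped like a Shaka error with `code` and `data` fields.

```javascript
// Hypothetical helper: unpack the data array of a Shaka error with code 3016.
function summarizeVideoError(error) {
  const data = Array.isArray(error.data) ? error.data : [];
  const [mediaErrorCode, extendedCode, detail] = data;
  return {
    shakaCode: error.code,
    // data[0]: MediaError code reported by the video element.
    mediaErrorCode: mediaErrorCode ?? null,
    // data[1]: Microsoft extended error code (Edge only, per the comment above).
    extendedCode: extendedCode ?? null,
    // data[2]: detail string (Chrome only, per the comment above).
    detail: detail ?? null,
  };
}

// Example with a plain object standing in for a real error.
console.log(summarizeVideoError({
  code: 3016,
  data: [4, null, 'MEDIA_ELEMENT_ERROR: Format error'],
}));
```

With a real player, the object passed in would typically be the `detail` of the player's `error` event.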

It would be great if you share your manifest and the platform information you are using with us, so that we can reproduce and help you debug! Thank you!

If you created your own scheme plugin, it is likely that you have a bug in that plugin. Could you share that plugin? Are you returning MP4 URLs from the plugin, or are you delegating to another request to that URL?

If you "return a URL" from your plugin, then the text of that URL is what is fed to the browser as media. You probably want to create another request to that target URL, and return the response to that request instead.

> If you created your own scheme plugin, it is likely that you have a bug in that plugin. Could you share that plugin? Are you returning MP4 URLs from the plugin, or are you delegating to another request to that URL?

    static parse(uri, request, requestType, progressUpdate, options) {
      // Strip the custom scheme prefix ("myplugin:") to get the manifest location.
      const parts = uri.split(':');
      const path = parts.slice(1).join(':');
      return shaka.util.AbortableOperation.notAbortable(PlayerPlatformPlugin.loadManifest(path, options))
        .chain((manifest) => {
          // manifestParser returns an mp4 URL, which is then fetched with HttpFetchPlugin.parse
          return shaka.net.HttpFetchPlugin.parse(
            PlayerPlatformPlugin.manifestParser(manifest), request, requestType, progressUpdate);
        }).chain((response) => {
          /** @type {shaka.extern.Response} */
          const res = {
            uri: response.uri,
            originalUri: response.uri,
            data: response.data,
            // mimeType is assigned in the full plugin below; it is not declared in this excerpt
            headers: { ...response.headers, 'content-type': mimeType }
          };
          return res;
        });
    }

I also tried passing a mimeType when loading the player, but that gives error 3016; without a mimeType it gives error code 4000.

player.load('myplugin:https//url', null, 'video/mp4')
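The `parse` code above derives the manifest location by dropping everything up to the first colon, and `loadManifest` (in the full plugin later in the thread) only treats the result as a URL when it contains `http://` or `https://`. A minimal sketch of those two checks, using the hypothetical helper names `stripScheme` and `looksLikeHttpUrl` to mirror the posted logic, shows what happens to a URI written exactly as in the post, without the colon after `https`. The post may simply contain a typo, so treat this as something to rule out rather than a diagnosis.

```javascript
// Mirrors the posted logic: drop the custom scheme, keep the rest of the URI.
function stripScheme(uri) {
  const parts = uri.split(':');
  return parts.slice(1).join(':');
}

// Mirrors the URL test used in the posted loadManifest.
function looksLikeHttpUrl(source) {
  return source.includes('https://') || source.includes('http://');
}

// A well-formed nested URL survives the round trip (example.com is a placeholder).
const good = stripScheme('myplugin:https://example.com/video.json');
console.log(good, looksLikeHttpUrl(good));

// The URI as literally written in the post loses nothing, but fails the URL test.
const asPosted = stripScheme('myplugin:https//url');
console.log(asPosted, looksLikeHttpUrl(asPosted));
```

If the second case applies, the plugin falls into its videoId branch instead of fetching the URL, which is worth checking before looking at the media itself.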

> If you "return a URL" from your plugin, then the text of that URL is what is fed to the browser as media. You probably want to create another request to that target URL, and return the response to that request instead.

Am I doing it the right way?

The same thing happens with my other plugin: it works fine in Chrome and Firefox on Linux and Windows, but in Safari on macOS it gives error 4026, and in Safari on iOS the URL is undefined. See issue #3708.

@joeyparrish @michellezhuogg can you please help me with this error?

My whole plugin:

    import shaka from '../../../node_modules/shaka-player/dist/shaka-player.ui';
    import { UAParser } from 'ua-parser-js';

    export const PlayerPlatformPlugin = class {
      /**
       * Scheme plugin entry point: loads the platform manifest, then fetches the chosen media URL.
       * @param uri
       * @param request
       * @param requestType
       * @param progressUpdate
       * @param options
       * @return {shaka.util.AbortableOperation<shaka.extern.Response>}
       */
      static parse(uri, request, requestType, progressUpdate, options) {
        try {
          // Strip the custom scheme prefix to get the manifest location.
          const parts = uri.split(':');
          const path = parts.slice(1).join(':');
          let mimeType;
          return shaka.util.AbortableOperation.notAbortable(PlayerPlatformPlugin.loadManifest(path, options))
            .chain((manifest) => {
              if (manifest.hls) {
                mimeType = 'application/x-mpegURL';
              } else {
                mimeType = 'video/mp4';
              }
              return shaka.net.HttpFetchPlugin.parse(
                PlayerPlatformPlugin.manifestParser(manifest), request, requestType, progressUpdate);
            }).chain((response) => {
              /** @type {shaka.extern.Response} */
              const res = {
                uri: response.uri,
                originalUri: response.uri,
                data: response.data,
                headers: { ...response.headers, 'content-type': mimeType }
              };
              console.log(res);
              return shaka.util.AbortableOperation.completed(res);
            });
        } catch (e) {
          return shaka.util.AbortableOperation.failed(e);
        }
      }

      /**
       * Load manifest from playPlatform.
       * source can be a videoId or the full url pointing to the manifest (hls, mpd, etc ...)
       */
      static async loadManifest(source, playerPlatformConfig = null) {
        let response;
        // TODO is it good enough as a test to differentiate full url from videoId
        if (source.includes('https://') || source.includes('http://')) {
          response = await fetch(source);
        } else if (playerPlatformConfig) {
          // Otherwise treat source as a videoId and build the endpoint URL from the config.
          const { endpoint, publicKey, hash, expiresAt } = playerPlatformConfig;
          response = await fetch(endpoint + '/' + source + '/' + publicKey + '/' + hash + '/' + expiresAt);
        } else {
          // Without a config there is nothing to fetch; fail here rather than on response.ok below.
          throw new Error('Incorrect config for PlayerPlatform asset/manifest');
        }
        if (!response.ok) {
          throw new Error(`Erreur HTTP ! statut : ${response.status}`);
        }
        return await response.json();
      }

      /**
       * PlayerPlatform parser utils. Choose an mp4 video file URL from those available in the manifest.
       * @param playerPlatformManifest
       * @param maxFormat
       * @return {*}
       */
      static getMp4Video(playerPlatformManifest, maxFormat) {
        const listFormat = [720, 540, 480, 360, 240];
        // Use < rather than <= so the loop never reads past the end of the list.
        for (let i = 0; i < listFormat.length; i++) {
          if (listFormat[i] <= maxFormat) {
            if (typeof (playerPlatformManifest.mp4[listFormat[i]]) !== 'undefined') {
              return playerPlatformManifest.mp4[listFormat[i]];
            }
          }
        }
        throw new Error('Erreur getMp4Video');
      }

      /**
       * Player platform manifest parser.
       * Choose between hls and mp4 file.
       * @param playerPlatformManifest
       * @return {*}
       */
      static manifestParser(playerPlatformManifest) {
        const ua = (new UAParser()).getResult();
        if (typeof (playerPlatformManifest.hls) !== 'undefined') {
          return playerPlatformManifest.hls;
        }
        /**
         * Arbitrary resolution chosen for device type.
         * Unable to add the list of mp4 in the resolution select of shaka player.
         */
        if (typeof (playerPlatformManifest.mp4) !== 'undefined') {
          if (ua.device.type === 'mobile') {
            return this.getMp4Video(playerPlatformManifest, 480);
          } else if (ua.device.type === 'tablet') {
            return this.getMp4Video(playerPlatformManifest, 540);
          } else {
            return this.getMp4Video(playerPlatformManifest, 720);
          }
        }
        throw new Error('Erreur selectFlux');
      }
    };

Thanks

Contents

- How to quickly fix the HTML5 error

- What the HTML5 error means

- How to fix the HTML5 error in a video player

- Additional ways to fix the HTML5 error

- MEDIA_ELEMENT_ERROR: Format error #3722

- Comments

- How to fix MEDIA_ERR_SRC_NOT_SUPPORTED in Opera

- Disable Opera VPN

- Enable trackers

- Clear cache and data

- Disable media flags

- Switch to another browser

- Tweaks for older Opera builds

- Bottom line: fixing the Opera media error

How to quickly fix the HTML5 error

Thanks to the steady progress of technology, the HTML5 standard appeared relatively recently. It is a new version of the markup language used to structure and display the content of web pages. Its innovations improved the way graphics and multimedia elements are created and managed and simplified working with objects. HTML5 has many advantages and offers great possibilities for organizing the web environment, but it is not free of failures. When watching video from online resources, users may run into a forced stop of playback caused by an HTML5 error. Reloading the page so that the content loads again usually solves the problem, but not always. Such failures are especially annoying on a metered internet connection.


What the HTML5 error means

With the introduction of HTML5, special plugins such as Adobe Flash, QuickTime, and other add-ons that convert digital content into video and sound are no longer needed. You do not have to download browser extensions or codecs to watch media content. The browser can play clips by its own means without any add-ons. This is because HTML5 works together with CSS and JavaScript, and media content is part of the web page's code. Media files are now embedded with standard tags, and the elements can come in various formats and use different codecs. Since 2013, applications have been developed for the new version of the markup language; HTML5 gradually came into use on most popular sites and is now the main standard. The technology is considered far more advanced than what was used before, and failures are not typical for it. Even so, users are often unable to view content online, and many already know the "Uppod HTML5: Ошибка загрузки" (loading error) failure in a player that supports the standard, or "HTML5: файл видео не найден" (video file not found). Such problems have various causes, the most common of which are:

- An outdated browser version;

- A random browser glitch;

- Server problems or maintenance work;

- Interference from third-party extensions or applications.

Modern video players that support the technology are now built into most websites, but the problem is still relevant, apparently because a complete transition to the new standard needs more time. For now, you have to solve the issue yourself.
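Because the same clip can be offered in several formats and codecs, a page can choose the first variant the current browser reports as playable. The sketch below illustrates that idea under stated assumptions: `pickPlayableSource` and the `{ src, type }` source objects are hypothetical, while `canPlayType` is the standard method of media elements.

```javascript
// Hypothetical helper: choose the best playable entry from a list of { src, type } objects.
function pickPlayableSource(video, sources) {
  let fallback = null;
  for (const source of sources) {
    // canPlayType returns '', 'maybe' or 'probably'.
    const verdict = video.canPlayType(source.type);
    if (verdict === 'probably') return source; // strongest answer wins immediately
    if (verdict === 'maybe' && !fallback) fallback = source; // remember the first 'maybe'
  }
  return fallback; // null when nothing is playable
}

// In a browser: assign the chosen file to the first <video> element on the page.
// The file names below are placeholders.
if (typeof document !== 'undefined') {
  const video = document.querySelector('video');
  const chosen = video && pickPlayableSource(video, [
    { src: 'clip.webm', type: 'video/webm; codecs="vp9, opus"' },
    { src: 'clip.mp4', type: 'video/mp4; codecs="avc1.42E01E, mp4a.40.2"' },
  ]);
  if (chosen) video.src = chosen.src;
}
```

Listing several `<source>` elements inside a `<video>` tag achieves a similar effect declaratively; the script form is mainly useful when the page needs to know which variant was picked.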

How to fix the HTML5 error in a video player

The problem is fairly easy to fix: remove the cause that triggers the failure. Here are several ways to fix the HTML5 error:

- First, reload the page; for random glitches this is the option that works;

- You can also change the quality of the video (choose a different resolution in the player settings);

- Try updating the browser. When a site uses an HTML5 player and the browser version does not support the standard, this error appears, and the fix is obvious. You can check for updates in the browser's settings. Download updates only from the official site. Sometimes the browser has to be reinstalled manually (removed completely and then installed again in its latest version) to work correctly with the new technology;

- Clean the browser of accumulated junk from time to time. Cache and cookies are cleared differently in different browsers; the option is usually in the program's settings. You can also choose the time range for which data is deleted; clearing the whole period is best.

To check whether the browser or something else is causing the HTML5 error, try playing the same video in another browser. This can also serve as a temporary workaround, but if you do not want to give up your usual program and the failure keeps recurring, updating or reinstalling the software can help.

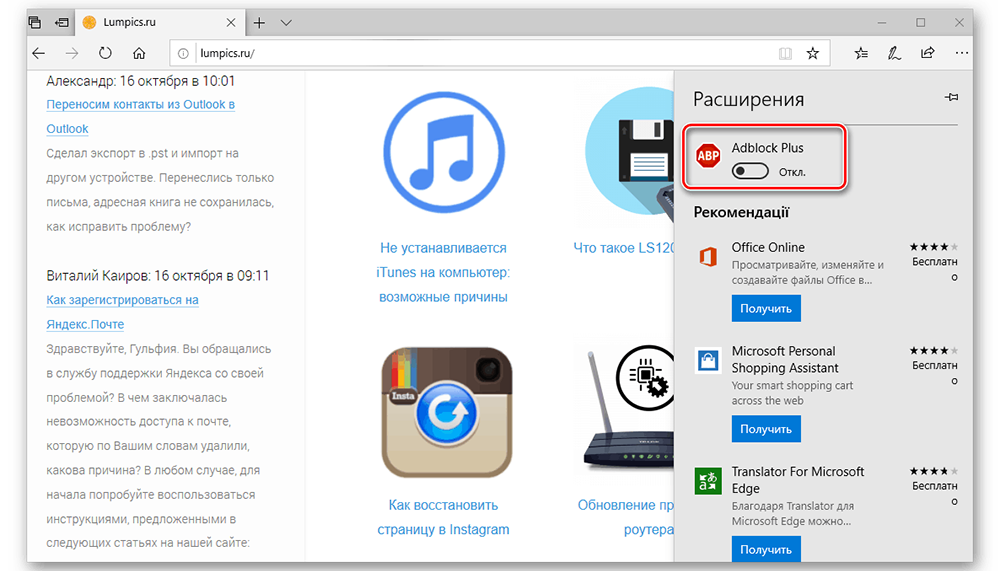

Additional ways to fix the HTML5 error

Browser extensions can also interfere with playback in an HTML5 player. Ad blockers in particular often block media content. To deactivate third-party extensions, open the Add-ons section in the browser settings, where you will see the full list of installed extensions that may interfere with playback, and turn them off. In some cases the problem is caused by an overly vigilant antivirus or firewall that actively protects you while you work with network resources. When such a program blocks traffic it considers unwanted, content stops loading. Temporarily disabling the software that blocks the connection solves the problem.

Sometimes the problem lies with the resource itself (hosting trouble, maintenance work, a DDoS attack, and so on). Then you simply have to wait, because there is nothing you can do about server-side errors except report them to the site's administrators. As a temporary workaround you could switch to Adobe Flash if the resource supports it, although Adobe ended support for Flash Player at the end of 2020, so this option is rarely available today. Some sites switch automatically when the browser does not support the modern standard. The methods above are fairly effective and should help, depending on the cause of the playback trouble.


Браузер Opera поддерживает воспроизведение мультимедиа, однако не все форматы мультимедиа поддерживаются браузером, и иногда исходный сайт не позволяет воспроизведение. Когда мы пытаемся воспроизвести эти ограниченные форматы файлов или источники в Opera, выдается ошибка «MEDIA_ERR_SRC_NOT_SUPPORTED». Мы можем попробовать несколько настроек, чтобы исправить эту ошибку браузера.

Среди старейших браузеров Opera создала для себя огромный сегмент преданных фанатов. И причина того же вряд ли является секретом. Благодаря множеству встроенных функций, плавному пользовательскому интерфейсу и тому факту, что он основан на исходном коде Chromium, все, похоже, творило чудеса для этого.

Однако, когда дело доходит до обработки и воспроизведения встроенных видео с веб-сайта, это, похоже, доставляет пользователям неудобства. Было много жалобы от пользователей относительно их неспособности воспроизвести видео.

Программы для Windows, мобильные приложения, игры — ВСЁ БЕСПЛАТНО, в нашем закрытом телеграмм канале — Подписывайтесь:)

Когда они пытаются это сделать, вместо этого их приветствует сообщение, в котором говорится: «Это видео либо недоступно, либо не поддерживается в этом браузере. Наряду с этим браузер Opera также выдает код ошибки: MEDIA_ERR_SRC_NOT_SUPPORTED.

Связанный: Как очистить историю, файлы cookie и кеш в браузере Opera?

Что ж, если вы тоже сталкиваетесь с этой проблемой, не волнуйтесь. В этом руководстве мы поделились несколькими изящными обходными путями, которые помогут вам исправить эту ошибку. Итак, без лишних слов, давайте проверим их.

Отключить Opera VPN

Opera поставляется со встроенным VPN, который позволяет направлять сетевой трафик через чужой сервер и анонимно просматривать веб-страницы. Однако в некоторых случаях веб-сайт может не отправлять данные исходному клиенту, если он работает в виртуальной частной сети.

Поэтому, если вы включили встроенный в браузер VPN-сервис, лучше отключить его сейчас.

Вот шаги, чтобы отключить VPN-соединение в браузере Opera:

- Запустите браузер Opera на компьютере.

- Щелкните значок VPN, расположенный слева от адресной строки.

- Отключите переключатель рядом с VPN Connected, и ваша задача будет выполнена.

Теперь попробуйте воспроизвести видео по вашему выбору и проверьте, была ли устранена основная проблема.

В то время как VPN поставляется с множеством плюсов, включая тот факт, что вы можете получить доступ к контенту с географическим ограничением, некоторые веб-сайты могут не работать правильно, если у вас включен VPN. Так что лучше держать эту виртуальную сеть выключенной, пока вы взаимодействуете с такими сайтами.

Включить трекеры

Трекеры следят за вашими ежедневными действиями в браузере, чтобы они могли отправлять вам персонализированный контент. Однако многие пользователи видят в этом угрозу конфиденциальности и предпочитают отключать их.

Но это может, хотя и редко, привести к взлому некоторых сайтов. Поэтому вы можете рассмотреть возможность включения этих трекеров, чтобы они могли выполнять свои функции по умолчанию.

Вот шаги, чтобы включить функционал трекера в браузере Opera:

- Запустите браузер Opera на вашем ПК.

- Нажмите на меню расположен вверху справа.

- Прокрутите до Конфиденциальность и безопасность раздел.

- Отключите переключатель блокировки трекеров.

Это отключит блокировку трекера в опере. Теперь обновите сайт, который не может воспроизвести видео, и проверьте, исправляет ли он ошибку Media_err_src_not_supported в Opera.

Включение трекеров может означать менее безопасную среду просмотра, поэтому вам придется принять этот компромисс соответственно.

Очистить кеш и данные

Всегда полезно сохранить как минимум временные данные. Они могут не только замедлить работу всего браузера, но также могут иметь негативные последствия для правильной загрузки некоторых сайтов. Поэтому было бы лучше, если бы вы рассматривали возможность удаления кеша и данных браузера через регулярные промежутки времени.

Вот шаги, чтобы очистить кеш и данные cookie в браузере Opera:

- Запустите компьютерный браузер Opera.

- Нажмите сочетание клавиш Ctrl + Shift + Del.

Откроется диалоговое окно «Очистить данные просмотра». - Установите флажки «Файлы cookie и другие данные сайта» и установите флажки «Кэшированные изображения и файлы».

- Нажмите «Очистить данные» и дождитесь завершения процесса.

Попробуйте воспроизвести соответствующее видео и проверьте, решена ли проблема.

Очистка этих данных может вывести вас из различных сайтов, но это всего лишь одноразовый сценарий. Как только вы войдете на сайты, данные cookie будут снова заполнены.

В Opera есть отдельный раздел «Флаги», который используется для тестирования экспериментальных функций. Многие технические энтузиасты обычно пытаются опробовать новейшие передовые функции раньше всех.

Однако, будучи экспериментальной, флаги, вероятно, могут нарушить нормальную работу браузера. Поэтому, если вы включили любой такой флаг, принадлежащий домену Media, подумайте о том, чтобы вернуть его в состояние по умолчанию.

Вот шаги, чтобы настроить флаги браузера Opera:

- Запустите браузер Opera на компьютере.

- Откройте страницу opera: // flags / в браузере.

- Печатать СМИ в строке поиска.

Он внесет все флаги, связанные с мультимедиа, убедитесь, что для всех таких флагов установлено значение по умолчанию.

- Если вы видите что-либо как Включено или же Отключено, затем измените его на значение по умолчанию.

Как только это будет сделано, перезапустите браузер, чтобы изменения вступили в силу. Затем попробуйте получить доступ к медиафайлу и проверьте, исправляет ли он ошибку media_err_src_not_supported в вашем браузере Opera.

Эти флаги, несомненно, дают вам возможность протестировать новые и интригующие функции, которые могут или не могут попасть в стабильную сборку. С другой стороны, он также имеет несколько предостережений, поэтому для повседневного использования лучше не взаимодействовать с этими флагами.

Переключиться на другой браузер

Браузер печально известен тем, что не поддерживает определенные кодеки. Кроме того, согласно Модератор форума Opera, есть некоторые коды, которые Opera не может воспроизвести, в первую очередь из-за проблем с лицензированием. В результате он полагается на ОС для воспроизведения этого мультимедиа.

Поэтому, если ваша ОС не может воспроизводить эти кодеки, браузер тоже не сможет этого сделать. В таких случаях выход только один; подумайте о переходе на другой браузер. В связи с этим существует довольно много браузеров Chromium и не-Chromium, которые вы можете попробовать.

Твики для старых сборок Opera

Если по какой-то причине вы все еще используете старую версию Opera, мы рекомендуем вам сразу же обновить Opera до последней сборки. Однако, если вы еще не планируете этого делать, есть несколько советов, которые вам нужно иметь в виду.

- Во-первых, обязательно запрещать флаг Encrypted Media на странице Flags браузера.

- Далее также рекомендуется запрещать функция Click to Play.

- Точно так же было бы лучше, если бы вы также рассмотрели отключение функция Opera Turbo также.

Все эти три функции уже удалены из последней сборки браузера. Поэтому, если вы используете последнюю версию, вам больше не нужно искать эти функции, поскольку они уже были обработаны.

Bottom line: fixing the Opera media error

This concludes the guide on how to fix the MEDIA_ERR_SRC_NOT_SUPPORTED error in the Opera browser. Of the five fixes mentioned in this guide, the combination of the first and the third worked for me.

The media codec problem was resolved by disabling the built-in VPN and clearing the browser's temporary data. I was then able to play the video without any issues.

Related: How to use Opera's free VPN service on a computer?

With that said, let us know which method worked in your case for fixing the MEDIA_ERR_SRC_NOT_SUPPORTED error in Opera, and whether you tried anything else that resolved the problem.

Finally, here are the recommended web browsers for your computer and mobile phone that you should try.

If you have any thoughts on how to fix MEDIA_ERR_SRC_NOT_SUPPORTED in Opera, feel free to drop them in the comment box below. If you find this article helpful, consider sharing it with your network.



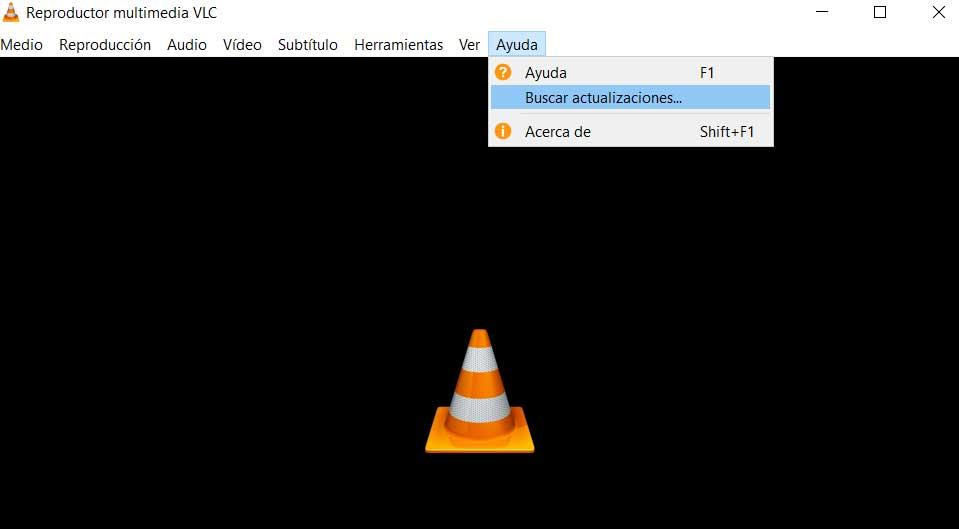

Over time, these applications have spread to most of our devices, both desktop and mobile. Their developers have therefore kept adding new features that improve the experience of using the software. It is worth noting that, as a rule, these are very stable and efficient applications when it comes to playing all kinds of content.

If we consider how widely video and music files are used right now, we can see the importance of these solutions. Despite all the work developers have put into improving them, that does not mean they are perfect programs. One of the main reasons is that they have to deal with many types of files, differing in format, size, and content.

In this sense, one of the most dreaded situations is when the media player itself throws a format-related error while loading a video. This means that, for some reason, the program cannot identify what we want to load and play. With current players we should not run into such problems with video formats in most cases, but they do sometimes happen. So below we show you what to do if it does.

Fix a format error when loading content

There are several aspects that developers of this type of program try to take care of as much as possible. One of them is the stability of the project, since a player that crashes or closes every now and then is useless. They also include certain features that help improve the user experience. On the other hand, one of the areas that gets the most attention here is compatibility with all types of formats, both audio and video.

That is why, if we end up in this situation, the first thing to do is update the program to the latest version. Some newer formats may not yet be supported in older versions of the software, so updating to the latest version should resolve the error. Keep in mind, too, that when we update the program, the codecs it supports are generally updated as well, which is very useful in this situation.

We should do something similar with the operating system on which the media player is installed. That way it also receives the latest codecs natively, so the content can be played without any problems. If these updates do not solve the problem, we have to take a more drastic step: looking for a similar but more compatible program that can meet our needs in this respect.

A vocabulary and associated APIs for HTML and XHTML

W3C Candidate Recommendation 29 April 2014

4.7 Embedded content

4.7.1 The img element

- Categories:

- Flow content.

- Phrasing content.

- Embedded content.

- Form-associated element.

- If the element has a usemap attribute: Interactive content.

- Palpable content.

- Contexts in which this element can be used:

- Where embedded content is expected.

- Content model:

- Empty.

- Content attributes:

- Global attributes

- alt: Replacement text for use when images are not available

- src: Address of the resource

- crossorigin: How the element handles crossorigin requests

- usemap: Name of image map to use

- ismap: Whether the image is a server-side image map

- width: Horizontal dimension

- height: Vertical dimension

- Tag omission in text/html:

- No end tag.

- Allowed ARIA role attribute values:

- presentation role only, for an img element whose alt attribute’s value is empty (alt=""), otherwise any role value.

- Allowed ARIA state and property attributes:

- Global aria-* attributes

- Any aria-* attributes applicable to the allowed roles.

- DOM interface:

      [NamedConstructor=Image(optional unsigned long width, optional unsigned long height)]
      interface HTMLImageElement : HTMLElement {
        attribute DOMString alt;
        attribute DOMString src;
        attribute DOMString crossOrigin;
        attribute DOMString useMap;
        attribute boolean isMap;
        attribute unsigned long width;
        attribute unsigned long height;
        readonly attribute unsigned long naturalWidth;
        readonly attribute unsigned long naturalHeight;
        readonly attribute boolean complete;
      };

An img element represents an image.

The image given by the src attribute is the embedded content; the value of the alt attribute provides equivalent content for those who cannot process images or who have image loading disabled.

The requirements on the alt attribute’s value are described in the next section.

The src attribute must be present, and must contain a valid non-empty URL potentially surrounded by spaces referencing a non-interactive, optionally animated, image resource that is neither paged nor scripted.

The requirements above imply that images can be static bitmaps (e.g. PNGs, GIFs, JPEGs), single-page vector documents (single-page PDFs, XML files with an SVG root element), animated bitmaps (APNGs, animated GIFs), animated vector graphics (XML files with an SVG root element that use declarative SMIL animation), and so forth. However, these definitions preclude SVG files with script, multipage PDF files, interactive MNG files, HTML documents, plain text documents, and so forth. [PNG] [GIF] [JPEG] [PDF] [XML] [APNG] [SVG] [MNG]

The img element must not be used as a layout tool. In particular, img elements should not be used to display transparent images, as such images rarely convey meaning and rarely add anything useful to the document.

The crossorigin attribute is a CORS settings attribute. Its purpose is to allow images from third-party sites that allow cross-origin access to be used with canvas.

An img is always in one of the following states:

- Unavailable

- The user agent hasn’t obtained any image data.

- Partially available

- The user agent has obtained some of the image data.

- Completely available

- The user agent has obtained all of the image data and at least the image dimensions are available.

- Broken

- The user agent has obtained all of the image data that it can, but it cannot even decode the image enough to get the image dimensions (e.g. the image is corrupted, or the format is not supported, or no data could be obtained).

When an img element is either in the partially available state or in the completely available state, it is said to be available.

An img element is initially unavailable.
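As a rough illustration of the state model above, the four states and the derived notion of "available" could be written down as follows. This is only a sketch: the constant and function names are invented here and are not part of any DOM interface.

```javascript
// The four states named in the text, as plain string constants.
const ImageState = Object.freeze({
  UNAVAILABLE: "unavailable",
  PARTIALLY_AVAILABLE: "partially available",
  COMPLETELY_AVAILABLE: "completely available",
  BROKEN: "broken",
});

// "Available" covers exactly the partially and completely available states.
function isAvailable(state) {
  return (
    state === ImageState.PARTIALLY_AVAILABLE ||
    state === ImageState.COMPLETELY_AVAILABLE
  );
}

// An img element starts out unavailable.
const initialState = ImageState.UNAVAILABLE;
```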

When an img element is available, it provides a paint source whose width is the image’s intrinsic width, whose height is the image’s intrinsic height, and whose appearance is the intrinsic appearance of the image.

In a browsing context where scripting is disabled, user agents may obtain images immediately or on demand. In a browsing context where scripting is enabled, user agents must obtain images immediately.

A user agent that obtains images immediately must synchronously update the image data of an img element whenever that element is created with a src attribute. A user agent that obtains images immediately must also synchronously update the image data of an img element whenever that element has its src or crossorigin attribute set, changed, or removed.

A user agent that obtains images on demand must update the image data of an img element whenever it needs the image data (i.e. on demand), but only if the img element has a src attribute, and only if the img element is in the unavailable state. When an img element’s src or crossorigin attribute is set, changed, or removed, if the user agent only obtains images on demand, the img element must return to the unavailable state.

Each img element has a last selected source, which must initially be null, and a current pixel density, which must initially be undefined.

When an img element has a current pixel density that is not 1.0, the element’s image data must be treated as if its resolution, in device pixels per CSS pixels, was the current pixel density.

For example, if the current pixel density is 3.125, that means that there are 300 device pixels per CSS inch, and thus if the image data is 300×600, it has an intrinsic dimension of 96 CSS pixels by 192 CSS pixels.
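The arithmetic in this example can be checked with a few lines of script. The function name below is made up for illustration; the only outside fact used is that CSS defines 96 CSS pixels per inch.

```javascript
// Intrinsic size in CSS pixels = image data size in device pixels / pixel density.
function intrinsicSizeInCssPixels(dataWidth, dataHeight, pixelDensity) {
  return {
    width: dataWidth / pixelDensity,
    height: dataHeight / pixelDensity,
  };
}

// 96 CSS pixels per inch times a density of 3.125 gives 300 device pixels per CSS inch.
const devicePixelsPerCssInch = 96 * 3.125;

// The 300x600 image from the example comes out at 96 by 192 CSS pixels.
const exampleSize = intrinsicSizeInCssPixels(300, 600, 3.125);
```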

Each Document object must have a list of available images. Each image in this list is identified by a tuple consisting of an absolute URL, a CORS settings attribute mode, and, if the mode is not No CORS, an origin. User agents may copy entries from one Document object’s list of available images to another at any time (e.g. when the Document is created, user agents can add to it all the images that are loaded in other Documents), but must not change the keys of entries copied in this way when doing so. User agents may also remove images from such lists at any time (e.g. to save memory).
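One way to picture the list of available images is as a map keyed by that tuple. The sketch below is an assumption-laden illustration, not how any particular user agent stores images: the names `makeImageKey` and `AvailableImages` are invented, and the mode strings simply mirror the CORS settings attribute states.

```javascript
// Builds the lookup key: absolute URL, CORS mode, and (unless the mode is
// "No CORS") the document's origin.
function makeImageKey(absoluteUrl, corsMode, documentOrigin) {
  const parts = [absoluteUrl, corsMode];
  if (corsMode !== "No CORS") {
    parts.push(documentOrigin);
  }
  // Arrays compare by identity, so the tuple is serialized to a string key.
  return JSON.stringify(parts);
}

// One list per Document; entries may be dropped at any time, e.g. to save memory.
class AvailableImages {
  constructor() {
    this.entries = new Map();
  }
  add(key, imageData) {
    this.entries.set(key, imageData);
  }
  has(key) {
    return this.entries.has(key);
  }
  remove(key) {
    this.entries.delete(key);
  }
}
```

Because the origin is left out of the key in No CORS mode, two documents from different origins would share an entry for the same URL in that mode, but not in the other modes.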

When the user agent is to update the image data of an img element, it must run the following steps:

- Return the img element to the unavailable state.

- If an instance of the fetching algorithm is still running for this element, then abort that algorithm, discarding any pending tasks generated by that algorithm.

- Forget the img element’s current image data, if any.

- If the user agent cannot support images, or its support for images has been disabled, then abort these steps.

- Otherwise, if the element has a src attribute specified and its value is not the empty string, let selected source be the value of the element’s src attribute, and selected pixel density be 1.0. Otherwise, let selected source be null and selected pixel density be undefined.

- Let the img element’s last selected source be selected source and the img element’s current pixel density be selected pixel density.

- If selected source is not null, run these substeps:

  - Resolve selected source, relative to the element. If that is not successful, abort these steps.

  - Let key be a tuple consisting of the resulting absolute URL, the img element’s crossorigin attribute’s mode, and, if that mode is not No CORS, the Document object’s origin.

  - If the list of available images contains an entry for key, then set the img element to the completely available state, update the presentation of the image appropriately, queue a task to fire a simple event named load at the img element, and abort these steps.

- Asynchronously await a stable state, allowing the task that invoked this algorithm to continue. The synchronous section consists of all the remaining steps of this algorithm until the algorithm says the synchronous section has ended. (Steps in synchronous sections are marked with ⌛.)

- ⌛ If another instance of this algorithm for this img element was started after this instance (even if it aborted and is no longer running), then abort these steps.

  Only the last instance takes effect, to avoid multiple requests when, for example, the src and crossorigin attributes are all set in succession.

- ⌛ If selected source is null, then set the element to the broken state, queue a task to fire a simple event named error at the img element, and abort these steps.

- ⌛ Queue a task to fire a progress event named loadstart at the img element.

- ⌛ Do a potentially CORS-enabled fetch of the absolute URL that resulted from the earlier step, with the mode being the current state of the element’s crossorigin content attribute, the origin being the origin of the img element’s Document, and the default origin behaviour set to taint.

  The resource obtained in this fashion, if any, is the img element’s image data. It can be either CORS-same-origin or CORS-cross-origin; this affects the origin of the image itself (e.g. when used on a canvas).

  Fetching the image must delay the load event of the element’s document until the task that is queued by the networking task source once the resource has been fetched (defined below) has been run.

  This, unfortunately, can be used to perform a rudimentary port scan of the user’s local network (especially in conjunction with scripting, though scripting isn’t actually necessary to carry out such an attack). User agents may implement cross-origin access control policies that are stricter than those described above to mitigate this attack, but unfortunately such policies are typically not compatible with existing Web content.

  If the resource is CORS-same-origin, each task that is queued by the networking task source while the image is being fetched must fire a progress event named progress at the img element.

- End the synchronous section, continuing the remaining steps asynchronously, but without missing any data from the fetch algorithm.

- As soon as possible, jump to the first applicable entry from the following list:

  - If the resource type is multipart/x-mixed-replace

    The next task that is queued by the networking task source while the image is being fetched must set the img element’s state to partially available.

    Each task that is queued by the networking task source while the image is being fetched must update the presentation of the image, but as each new body part comes in, it must replace the previous image. Once one body part has been completely decoded, the user agent must set the img element to the completely available state and queue a task to fire a simple event named load at the img element.

    The progress and loadend events are not fired for multipart/x-mixed-replace image streams.

  - If the resource type and data corresponds to a supported image format, as described below

    The next task that is queued by the networking task source while the image is being fetched must set the img element’s state to partially available.

    That task, and each subsequent task, that is queued by the networking task source while the image is being fetched must update the presentation of the image appropriately (e.g. if the image is a progressive JPEG, each packet can improve the resolution of the image).

    Furthermore, the last task that is queued by the networking task source once the resource has been fetched must additionally run the steps for the matching entry in the following list:

    - If the download was successful and the user agent was able to determine the image’s width and height

      - Set the img element to the completely available state.

      - Add the image to the list of available images using the key key.

      - If the resource is CORS-same-origin: fire a progress event named load at the img element. If the resource is CORS-cross-origin: fire a simple event named load at the img element.

      - If the resource is CORS-same-origin: fire a progress event named loadend at the img element. If the resource is CORS-cross-origin: fire a simple event named loadend at the img element.

    - Otherwise

      - Set the img element to the broken state.

      - If the resource is CORS-same-origin: fire a progress event named load at the img element. If the resource is CORS-cross-origin: fire a simple event named load at the img element.

      - If the resource is CORS-same-origin: fire a progress event named loadend at the img element. If the resource is CORS-cross-origin: fire a simple event named loadend at the img element.

  - Otherwise

    Either the image data is corrupted in some fatal way such that the image dimensions cannot be obtained, or the image data is not in a supported file format; the user agent must set the img element to the broken state, abort the fetching algorithm, discarding any pending tasks generated by that algorithm, and then queue a task to first fire a simple event named error at the img element and then fire a simple event named loadend at the img element.

While a user agent is running the above algorithm for an element x, there must be a strong reference from the element’s Document to the element x, even if that element is not in its Document.

When an img element is in the completely available state and the user agent can decode the media data without errors, then the img element is said to be fully decodable.

Whether the image is fetched successfully or not (e.g. whether the response code was a 2xx code or equivalent) must be ignored when determining the image’s type and whether it is a valid image.

This allows servers to return images with error responses, and have them displayed.

The user agent should apply the image sniffing rules to determine the type of the image, with the image’s associated Content-Type headers giving the official type. If these rules are not applied, then the type of the image must be the type given by the image’s associated Content-Type headers.
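To give a feel for what sniffing means in practice, the sketch below checks the leading bytes of the data against the well-known signatures of PNG, GIF, and JPEG, and otherwise falls back to the declared Content-Type. It is a deliberately simplified stand-in, not the actual image sniffing rules, which cover more formats and conditions; the function name `sniffImageType` is invented. Note that the HTTP status code is not an input at all, in line with the requirement to ignore it.

```javascript
// Leading-byte signatures for three common image formats.
const IMAGE_SIGNATURES = [
  { type: "image/png", bytes: [0x89, 0x50, 0x4e, 0x47, 0x0d, 0x0a, 0x1a, 0x0a] },
  { type: "image/gif", bytes: [0x47, 0x49, 0x46, 0x38, 0x37, 0x61] }, // "GIF87a"
  { type: "image/gif", bytes: [0x47, 0x49, 0x46, 0x38, 0x39, 0x61] }, // "GIF89a"
  { type: "image/jpeg", bytes: [0xff, 0xd8, 0xff] },
];

// data: Uint8Array (or array of byte values); contentType: the declared type.
// Returns the sniffed type if a signature matches, else the declared type.
function sniffImageType(data, contentType) {
  for (const { type, bytes } of IMAGE_SIGNATURES) {
    const matches =
      data.length >= bytes.length && bytes.every((b, i) => data[i] === b);
    if (matches) {
      return type;
    }
  }
  return contentType;
}
```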

User agents must not support non-image resources with the img element (e.g. XML files whose root element is an HTML element). User agents must not run executable code (e.g. scripts) embedded in the image resource. User agents must only display the first page of a multipage resource (e.g. a PDF file). User agents must not allow the resource to act in an interactive fashion, but should honor any animation in the resource.

This specification does not specify which image types are to be supported.

What an img element represents depends on the src attribute and the alt attribute.

- If the src attribute is set and the alt attribute is set to the empty string

  The image is either decorative or supplemental to the rest of the content, redundant with some other information in the document.

  If the image is available and the user agent is configured to display that image, then the element represents the element’s image data.

  Otherwise, the element represents nothing, and may be omitted completely from the rendering. User agents may provide the user with a notification that an image is present but has been omitted from the rendering.

- If the src attribute is set and the alt attribute is set to a value that isn’t empty

  The image is a key part of the content; the alt attribute gives a textual equivalent or replacement for the image.

  If the image is available and the user agent is configured to display that image, then the element represents the element’s image data.

  Otherwise, the element represents the text given by the alt attribute. User agents may provide the user with a notification that an image is present but has been omitted from the rendering.

- If the src attribute is set and the alt attribute is not

  There is no textual equivalent of the image available.

  If the image is available and the user agent is configured to display that image, then the element represents the element’s image data.

  Otherwise, the user agent should display some sort of indicator that there is an image that is not being rendered, and may, if requested by the user, or if so configured, or when required to provide contextual information in response to navigation, provide caption information for the image, derived as follows:

  - If the image is a descendant of a figure element that has a child figcaption element, and, ignoring the figcaption element and its descendants, the figure element has no Text node descendants other than inter-element whitespace, and no embedded content descendant other than the img element, then the contents of the first such figcaption element are the caption information; abort these steps.

  - There is no caption information.

- If the src attribute is not set and either the alt attribute is set to the empty string or the alt attribute is not set at all

  The element represents nothing.

- Otherwise

  The element represents the text given by the alt attribute.
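The case analysis above can be condensed into a small decision function. This is a sketch under stated assumptions: absent attributes are modelled as `null`, the flag `canShowImage` stands for "the image is available and the user agent is configured to display it", the returned labels are invented, and the caption lookup for the missing-alt case is left out.

```javascript
// src, alt: attribute values, or null when the attribute is absent.
// canShowImage: image is available AND the user agent is set to display it.
function whatImgRepresents(src, alt, canShowImage) {
  const srcIsSet = src !== null;

  if (srcIsSet && alt === "") {
    // Decorative or supplemental image.
    return canShowImage ? "image data" : "nothing";
  }
  if (srcIsSet && alt !== null) {
    // Image is a key part of the content; alt is its textual equivalent.
    return canShowImage ? "image data" : "alt text";
  }
  if (srcIsSet) {
    // alt is missing: no textual equivalent is available.
    return canShowImage ? "image data" : "indicator";
  }
  if (alt === null || alt === "") {
    return "nothing";
  }
  return "alt text";
}
```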

The alt attribute does not represent advisory information. User agents must not present the contents of the alt attribute in the same way as content of the title attribute.

While user agents are encouraged to repair cases of missing alt attributes, authors must not rely on such behavior. Requirements for providing text to act as an alternative for images are described in detail below.

The contents of img elements, if any, are ignored for the purposes of rendering.

The usemap attribute, if present, can indicate that the image has an associated image map.

The ismap attribute, when used on an element that is a descendant of an a element with an href attribute, indicates by its presence that the element provides access to a server-side image map. This affects how events are handled on the corresponding a element.

The ismap attribute is a boolean attribute. The attribute must not be specified on an element that does not have an ancestor a element with an href attribute.

The img element supports dimension attributes.

The alt and src IDL attributes must reflect the respective content attributes of the same name.

The crossOrigin IDL attribute must reflect the crossorigin content attribute, limited to only known values.

The useMap IDL attribute must reflect the usemap content attribute.

The isMap IDL attribute must reflect the ismap content attribute.

- image . width [ = value ]

- image . height [ = value ]

  These attributes return the actual rendered dimensions of the image, or zero if the dimensions are not known.

  They can be set, to change the corresponding content attributes.

- image . naturalWidth

- image . naturalHeight

  These attributes return the intrinsic dimensions of the image, or zero if the dimensions are not known.

- image . complete

  Returns true if the image has been completely downloaded or if no image is specified; otherwise, returns false.

- image = new Image( [ width [, height ] ] )

  Returns a new img element, with the width and height attributes set to the values passed in the relevant arguments, if applicable.

The IDL attributes width and height must return the rendered width and height of the image, in CSS pixels, if the image is being rendered, and is being rendered to a visual medium; or else the intrinsic width and height of the image, in CSS pixels, if the image is available but not being rendered to a visual medium; or else 0, if the image is not available. [CSS]

On setting, they must act as if they reflected the respective content attributes of the same name.

The IDL attributes naturalWidth and naturalHeight must return the intrinsic width and height of the image, in CSS pixels, if the image is available, or else 0. [CSS]
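The getter rules for these four attributes amount to a short fallback chain. The sketch below models an image as a plain object; the field names (`available`, `renderedToVisualMedium`, `rendered`, `intrinsic`) are hypothetical and chosen only to mirror the wording above.

```javascript
// image: {
//   available: boolean,
//   renderedToVisualMedium: boolean,
//   rendered: { width, height },   // rendered size in CSS pixels
//   intrinsic: { width, height },  // intrinsic size in CSS pixels
// }
function idlDimensions(image) {
  let width = 0;
  let height = 0;
  if (image.renderedToVisualMedium) {
    // Being rendered to a visual medium: report the rendered size.
    width = image.rendered.width;
    height = image.rendered.height;
  } else if (image.available) {
    // Available but not rendered visually: report the intrinsic size.
    width = image.intrinsic.width;
    height = image.intrinsic.height;
  }
  return {
    width,
    height,
    // The natural dimensions depend only on availability.
    naturalWidth: image.available ? image.intrinsic.width : 0,
    naturalHeight: image.available ? image.intrinsic.height : 0,
  };
}
```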

The IDL attribute complete must return true if any of the following conditions is true:

- Both the src attribute and the srcset attribute are omitted.

- The srcset attribute is omitted and the src attribute’s value is the empty string.

- The final task that is queued by the networking task source once the resource has been fetched has been queued.

- The img element is completely available.

- The img element is broken.

Otherwise, the attribute must return false.

The value of complete can thus change while a script is executing.
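Read as code, the conditions for complete form a single boolean expression. In this sketch the img element is modelled as a plain object whose field names (`src`, `srcset`, `finalTaskQueued`, `state`) are assumptions made for illustration; absent attributes are represented by `null`.

```javascript
// img: {
//   src: string | null,        // null when the attribute is omitted
//   srcset: string | null,     // null when the attribute is omitted
//   finalTaskQueued: boolean,  // the final networking task has been queued
//   state: string,             // e.g. "unavailable", "completely available", "broken"
// }
function isComplete(img) {
  const srcOmitted = img.src === null;
  const srcsetOmitted = img.srcset === null;
  return (
    (srcOmitted && srcsetOmitted) ||
    (srcsetOmitted && img.src === "") ||
    img.finalTaskQueued === true ||
    img.state === "completely available" ||
    img.state === "broken"
  );
}
```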

A constructor is provided for creating HTMLImageElement objects (in addition to

the factory methods from DOM such as createElement()): Image(width, height).

When invoked as a constructor, this must return a new HTMLImageElement object (a new

img element). If the width argument is present, the new object’s

width content attribute must be set to width. If the height argument is also present, the new object’s

height content attribute must be set to height. The element’s document must be the active document of the

browsing context of the Window object on which the interface object of

the invoked constructor is found.
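The argument handling of the constructor can be sketched without a browser. The function below is a hypothetical stand-in, not the real Image constructor: it returns a plain object describing the content attributes that would be set, and it assumes that height is only considered when width is also present, as the wording above suggests.

```javascript
// Hypothetical stand-in for `new Image(width, height)`; returns a plain
// description of the element instead of a real HTMLImageElement.
function createImageModel(width, height) {
  const attributes = {};
  if (width !== undefined) {
    attributes.width = String(width);
    // Height is only looked at when width is also present.
    if (height !== undefined) {
      attributes.height = String(height);
    }
  }
  return { tagName: "img", attributes };
}
```

In a browser the equivalent call is new Image(72, 48), which yields an img element whose width and height content attributes are "72" and "48".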

4.7.1.1 Requirements for providing text to act as an alternative for images

Text alternatives, [WCAG]

are a primary way of making visual information accessible, because they can be rendered through any

sensory modality (for example, visual, auditory or tactile) to match the needs of the user. Providing text alternatives allows

the information to be rendered in a variety of ways by a variety of user agents. For example, a person who cannot see a picture

can have the text alternative read aloud using synthesized speech.

The alt attribute on images is a very important accessibility attribute

(it is supported by all browsers, most authoring tools and is the most well known accessibility

technique among authors). Useful alt attribute content enables users who are unable

to view images on a page to comprehend and make use of that page as much as those who can.

4.7.1.1.1 Examples of scenarios where users benefit from text alternatives for images

- They have a very slow connection and are browsing with images disabled.

- They have a vision impairment and use text to speech software.

- They have a cognitive impairment and use text to speech software.

- They are using a text-only browser.

- They are listening to the page being read out by a voice Web browser.

- They have images disabled to save on download costs.

- They have problems loading images or the source of an image is wrong.

4.7.1.1.2 General guidelines

Except where otherwise specified, the alt attribute must be specified and its value must not be empty;

the value must be an appropriate functional replacement for the image. The specific requirements for the alt attribute content

depend on the image’s function in the page, as described in the following sections.

To determine an appropriate text alternative it is important to think about why an image is being included in a page.

What is its purpose? Thinking like this will help you to understand what is important about the image for the

intended audience. Every image has a reason for being on a page, because it provides useful information, performs a

function, labels an interactive element, enhances aesthetics or is purely decorative. Therefore, knowing what the image

is for makes writing an appropriate text alternative easier.
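As a rough aid for authors, the general rule can be turned into a check that lists img tags whose alt attribute is missing or empty. This is a minimal sketch under stated assumptions: the function name findAltProblems is hypothetical, the regular expressions only handle simple quoted attributes, and a flagged image is a candidate for review rather than an error, since some cases permit an empty alt.

```javascript
// Hypothetical helper: lists <img> tags with a missing or empty alt attribute.
// Regex-based and deliberately simple; not a substitute for a real HTML parser.
function findAltProblems(html) {
  const tags = html.match(/<img\b[^>]*>/gi) || [];
  const problems = [];
  for (const tag of tags) {
    const match = tag.match(/\salt\s*=\s*(?:"([^"]*)"|'([^']*)')/i);
    if (!match) {
      problems.push({ tag, problem: "missing alt" });
      continue;
    }
    const value = match[1] !== undefined ? match[1] : match[2];
    if (value.trim() === "") {
      problems.push({ tag, problem: "empty alt" });
    }
  }
  return problems;
}
```

Whether a reported image really needs text still depends on the image's function in the page, so the output is best treated as a review list.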

4.7.1.1.3 A link or button containing nothing but an image

When an a element that is a hyperlink, or a button element, has no text content

but contains one or more images, include text in the alt attribute(s) that together convey the purpose of the link or button.

In this example, a user is asked to pick her preferred color

from a list of three. Each color is given by an image, but for

users who cannot view the images,

the color names are included within the alt attributes of the images:


<ul> <li><a href="red.html"><img src="red.jpeg" alt="Red"></a></li> <li><a href="green.html"><img src="green.jpeg" alt="Green"></a></li> <li><a href="blue.html"><img src="blue.jpeg" alt="Blue"></a></li> </ul>

In this example, a link contains a logo. The link points to the W3C web site from an external site. The text alternative is

a brief description of the link target.


<a href="http://w3.org"> <img src="images/w3c_home.png" width="72" height="48" alt="W3C web site"> </a>

This example is the same as the previous example, except that the link is on the W3C web site. The text alternative is

a brief description of the link target.


<a href="http://w3.org"> <img src="images/w3c_home.png" width="72" height="48" alt="W3C home"> </a>

In this example, a link contains a print preview icon. The link points to a version of the page with a

print stylesheet applied. The text alternative is a brief description of the link target.


<a href="preview.html"> <img src="images/preview.png" width="32" height="30" alt="Print preview."> </a>

In this example, a button contains a search icon. The button submits a search form. The text alternative is a

brief description of what the button does.


<button> <img src="images/search.png" width="74" height="29" alt="Search"> </button>

In this example, a company logo for the PIP Corporation has been split into the following two images,

the first containing the word PIP and the second with the abbreviated word CO. The images are the

sole content of a link to the PIPCO home page. In this case a brief description of the link target is provided.

As the images are presented to the user as a single entity the text alternative PIP CO home is in the

alt attribute of the first image.

<a href="pipco-home.html"> <img src="pip.gif" alt="PIP CO home"><img src="co.gif" alt=""> </a>

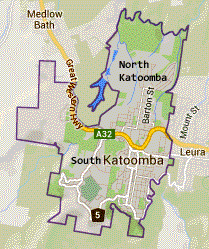

4.7.1.1.4 Graphical Representations: Charts, diagrams, graphs, maps, illustrations

Users can benefit when content is presented in graphical form, for example as a

flowchart, a diagram, a graph, or a map showing directions. Users also benefit when

content presented in a graphical form is also provided in a textual format. These users include

those who are unable to view the image (e.g. because they have a very slow connection,

or because they are using a text-only browser, or because they are listening to the page

being read out by a hands-free automobile voice Web browser, or because they have a

visual impairment and use an assistive technology to render the text to speech).

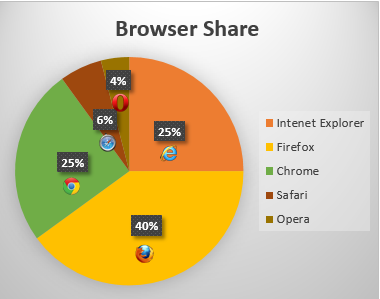

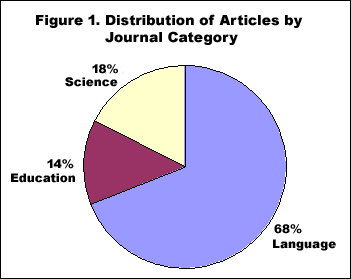

In the following example we have an image of a pie chart, with text in the alt attribute

representing the data shown in the pie chart:

<img src="piechart.gif" alt="Pie chart: Browser Share - Internet Explorer 25%, Firefox 40%, Chrome 25%, Safari 6% and Opera 4%.">
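When the chart data already exists in a script, text of this kind can be generated rather than typed by hand. The following is a minimal sketch; the function name pieChartAlt and its parameters are hypothetical, and the wording simply mirrors the alt text in the example above.

```javascript
// Hypothetical helper: builds an alt string from a chart title and
// an array of [label, percent] pairs.
function pieChartAlt(title, shares) {
  const parts = shares.map(([label, percent]) => `${label} ${percent}%`);
  const list =
    parts.length > 1
      ? `${parts.slice(0, -1).join(", ")} and ${parts[parts.length - 1]}`
      : parts.join("");
  return `Pie chart: ${title} - ${list}.`;
}

const alt = pieChartAlt("Browser Share", [
  ["Internet Explorer", 25],
  ["Firefox", 40],
  ["Chrome", 25],
  ["Safari", 6],
  ["Opera", 4],
]);
```

Generating the text from the same data that drives the chart keeps the two from drifting apart when the figures change.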

In the case where an image repeats the previous paragraph in graphical form, the alt attribute content labels the image.

<p>According to a recent study Firefox has a 40% browser share, Internet Explorer has 25%, Chrome has 25%, Safari has 6% and Opera has 4%.</p> <p><img src="piechart.gif" alt="Pie chart representing the data in the previous paragraph."></p>

When the image is not available, for example because the src attribute value is incorrect, the text alternative provides the user with a brief description of the image content.

In cases where the text alternative is lengthy, more than a sentence or two, or would benefit from

the use of structured markup, provide a brief description or label using the alt

attribute, and an associated text alternative.

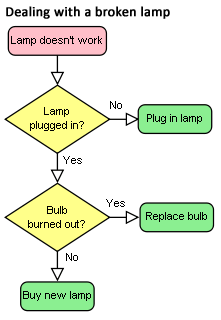

Here’s an example of a flowchart image with a short text alternative included in the alt attribute. In this case the text alternative is a description of the link target, as the image is the sole content of a link. The link points to a description, within the same document, of the process represented in the flowchart.

<a href="#desc"><img src="flowchart.gif" alt="Flowchart: Dealing with a broken lamp."></a> ... ... <div id="desc"> <h2>Dealing with a broken lamp</h2> <ol> <li>Check if it's plugged in, if not, plug it in.</li> <li>If it still doesn't work; check if the bulb is burned out. If it is, replace the bulb.</li> <li>If it still doesn't work; buy a new lamp.</li> </ol> </div>

In this example, there is an image of a chart. It would be inappropriate to provide the information depicted in

the chart as a plain text alternative in an alt attribute as the information is a data set. Instead a

structured text alternative is provided below the image in the form of a data table using the data that is represented

in the chart image.

Indications of the highest and lowest rainfall for each season have been included in the

table, so trends easily identified in the chart are also available in the data table.

Average rainfall in millimetres by country and season.

| | United Kingdom | Japan | Australia |
|---|---|---|---|
| Spring | 5.3 (highest) | 2.4 | 2 (lowest) |
| Summer | 4.5 (highest) | 3.4 | 2 (lowest) |
| Autumn | 3.5 (highest) | 1.8 | 1.5 (lowest) |
| Winter | 1.5 (highest) | 1.2 | 1 (lowest) |

<img src="rainchart.gif" alt="Bar chart: Average rainfall in millimetres by Country and Season."> <table> <caption>Rainfall in millimetres by Country and Season.</caption> <tr><td><th scope="col">UK <th scope="col">Japan<th scope="col">Australia</tr> <tr><th scope="row">Spring <td>5.5 (highest)<td>2.4 <td>2 (lowest)</tr> <tr><th scope="row">Summer <td>4.5 (highest)<td>3.4<td>2 (lowest)</tr> <tr><th scope="row">Autumn <td>3.5 (highest) <td>1.8 <td>1.5 (lowest)</tr> <tr><th scope="row">Winter <td>1.5 (highest) <td>1.2 <td>1 (lowest)</tr> </table>
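The highest and lowest markers in such a table can be derived from the data instead of being written by hand. The sketch below assumes one row of numeric values per season; the function name annotateRow is hypothetical, and the sample values are those of the Summer row.

```javascript
// Hypothetical helper: labels the largest and smallest value in one table row.
function annotateRow(values) {
  const max = Math.max(...values);
  const min = Math.min(...values);
  return values.map((value) => {
    if (value === max) return `${value} (highest)`;
    if (value === min) return `${value} (lowest)`;
    return String(value);
  });
}

// Summer: UK, Japan, Australia
const summerCells = annotateRow([4.5, 3.4, 2]);
// summerCells is ["4.5 (highest)", "3.4", "2 (lowest)"]
```

Each returned string can then be placed in a td cell of the corresponding row.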

4.7.1.1.5 Images of text

Sometimes, an image only contains text, and the purpose of the image

is to display text using visual effects and/or fonts. It is strongly

recommended that text styled using CSS be used, but if this is not possible, provide

the same text in the alt attribute as is in the image.

This example shows an image of the text "Get Happy!" written in a fancy multi colored freehand style.

The image makes up the content of a heading. In this case the text alternative for the image is "Get Happy!".

<h1><img src="gethappy.gif" alt="Get Happy!"></h1>

In this example we have an advertising image consisting of text. The phrase "The BIG sale" is repeated 3 times, each time smaller and fainter, and the last line reads "…ends Friday".

In the context of use, as an advertisement, it is recommended that the image’s text alternative include the text "The BIG sale" only once, as the repetition is for visual effect; repeating the text for users who cannot view the image is unnecessary and could be confusing.

<p><img src="sale.gif" alt="The BIG sale ...ends Friday."></p>
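If the lines of text in such an image are known, the repetition can be collapsed mechanically before the result is used as a text alternative. This is a minimal sketch; the function name collapseRepeatedLines is hypothetical, and it only drops consecutive duplicates.

```javascript
// Hypothetical helper: drops consecutive repeated lines and joins the rest.
function collapseRepeatedLines(lines) {
  const kept = [];
  for (const line of lines) {
    const text = line.trim();
    if (text !== "" && text !== kept[kept.length - 1]) {
      kept.push(text);
    }
  }
  return kept.join(" ");
}

const saleText = collapseRepeatedLines([
  "The BIG sale",
  "The BIG sale",
  "The BIG sale",
  "...ends Friday",
]);
// saleText is "The BIG sale ...ends Friday"
```

An author would still review the result, since whether repetition carries meaning depends on the context in which the image is used.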

In situations where there is also a photo or other graphic along with the image of text,

ensure that the words in the image text are included in the text alternative, along with any other description

of the image that conveys meaning to users who can view the image, so the information is also

available to users who cannot view the image.

When an image is used to represent a character that cannot otherwise be represented in Unicode,

for example gaiji, itaiji, or new characters such as novel currency symbols, the text alternative

should be a more conventional way of writing the same thing, e.g. using the phonetic hiragana or

katakana to give the character’s pronunciation.

In this example from 1997, a new-fangled currency symbol that looks like a curly E with two

bars in the middle instead of one is represented using an image. The alternative text gives the

character’s pronunciation.


<p>Only <img src="euro.png" alt="euro ">5.99!

An image should not be used if Unicode characters would serve an identical purpose. Only when

the text cannot be directly represented using Unicode, e.g. because of decorations or because the

character is not in the Unicode character set (as in the case of gaiji), would an image be

appropriate.

If an author is tempted to use an image because their default system font does not

support a given character, then Web Fonts are a better solution than images.

An illuminated manuscript might use graphics for some of its letters. The text alternative in

such a situation is just the character that the image represents.


<p><img src="initials/fancyO.png" alt="O">nce upon a time and a long long time ago...

4.7.1.1.6 Images that include text

Sometimes, an image consists of a graphic such as a chart and associated text.

In this case it is recommended that the text in the image is included in the text alternative.

Consider an image containing a pie chart and associated text. It is recommended wherever

possible to provide any associated text as text, not an image of text.

If this is not possible include the text in the text alternative along with the pertinent information

conveyed in the image.

<p><img src="figure1.gif" alt="Figure 1. Distribution of Articles by Journal Category. Pie chart: Language=68%, Education=14% and Science=18%."></p>

Here’s another example of the same pie chart image,