-

#1

Hi! I have been using Proxmox on my home server for a while now. It currently hosts 2 VMs and 2 LXC containers. Today I updated the containers (Pi-hole and UniFi Controller) and Proxmox itself. After that I couldn't log in to the web panel. The VMs seem to work fine, as does Pi-hole. Only the UniFi Controller is unreachable after the updates, and so is the Proxmox web panel.

My Proxmox version:

Code:

proxmox-ve: 5.4-1 (running kernel: 4.15.18-12-pve)

pve-manager: 5.4-7 (running version: 5.4-7/fc10404a)

pve-kernel-4.15: 5.4-2

pve-kernel-4.15.18-14-pve: 4.15.18-39

pve-kernel-4.15.18-12-pve: 4.15.18-36

pve-kernel-4.15.18-10-pve: 4.15.18-32

corosync: 2.4.4-pve1

criu: 2.11.1-1~bpo90

glusterfs-client: 3.8.8-1

ksm-control-daemon: 1.2-2

libjs-extjs: 6.0.1-2

libpve-access-control: 5.1-10

libpve-apiclient-perl: 2.0-5

libpve-common-perl: 5.0-52

libpve-guest-common-perl: 2.0-20

libpve-http-server-perl: 2.0-13

libpve-storage-perl: 5.0-43

libqb0: 1.0.3-1~bpo9

lvm2: 2.02.168-pve6

lxc-pve: 3.1.0-3

lxcfs: 3.0.3-pve1

novnc-pve: 1.0.0-3

proxmox-widget-toolkit: 1.0-28

pve-cluster: 5.0-37

pve-container: 2.0-39

pve-docs: 5.4-2

pve-edk2-firmware: 1.20190312-1

pve-firewall: 3.0-22

pve-firmware: 2.0-6

pve-ha-manager: 2.0-9

pve-i18n: 1.1-4

pve-libspice-server1: 0.14.1-2

pve-qemu-kvm: 3.0.1-4

pve-xtermjs: 3.12.0-1

qemu-server: 5.0-53

smartmontools: 6.5+svn4324-1

spiceterm: 3.0-5

vncterm: 1.5-3

zfsutils-linux: 0.7.13-pve1~bpo2

I read that time sync might be the problem, so I added a custom NTP server and restarted the time sync service, but nothing happened.

The whole Linux environment is new to me, as is Proxmox. It would be great if someone could give me a few hints on how to solve my problem.


mira

Proxmox Staff Member

-

#2

What’s the status of pveproxy? (systemctl status pveproxy.service)

Anything in the logs? (journalctl)

-

#3

What’s the status of pveproxy? (systemctl status pveproxy.service)

Anything in the logs? (journalctl)

Thanks for the reply!

Code:

root@serv:~# systemctl status pveproxy.service

● pveproxy.service - PVE API Proxy Server

Loaded: loaded (/lib/systemd/system/pveproxy.service; enabled; vendor preset: enabled)

Active: active (running) since Sat 2019-05-25 08:29:28 CEST; 1 months 3 days ago

Main PID: 1748 (pveproxy)

Tasks: 4 (limit: 4915)

Memory: 194.2M

CPU: 3min 12.244s

CGroup: /system.slice/pveproxy.service

├─ 1748 pveproxy

├─18891 pveproxy worker

├─18892 pveproxy worker

└─18893 pveproxy worker

Jun 27 11:14:02 serv pveproxy[18893]: proxy detected vanished client connection

Jun 27 11:14:14 serv pveproxy[18892]: proxy detected vanished client connection

Jun 27 11:14:32 serv pveproxy[18892]: proxy detected vanished client connection

Jun 27 12:22:37 serv pveproxy[18891]: proxy detected vanished client connection

Jun 27 12:40:16 serv pveproxy[18892]: proxy detected vanished client connection

Jun 27 13:44:39 serv pveproxy[18892]: proxy detected vanished client connection

Jun 27 14:42:32 serv pveproxy[18892]: proxy detected vanished client connection

Jun 27 14:43:20 serv pveproxy[18893]: proxy detected vanished client connection

Jun 27 17:40:34 serv pveproxy[18892]: problem with client 192.168.1.6; Connection reset by peer

Jun 27 17:40:53 serv pveproxy[18892]: proxy detected vanished client connection

Log:

Code:

root@serv:~# journalctl

-- Logs begin at Sat 2019-05-25 08:29:18 CEST, end at Thu 2019-06-27 19:22:00 CEST. --

May 25 08:29:18 serv kernel: Linux version 4.15.18-12-pve (build@pve) (gcc version 6.3.0 20170516 (Debian 6.3

May 25 08:29:18 serv kernel: Command line: BOOT_IMAGE=/boot/vmlinuz-4.15.18-12-pve root=/dev/mapper/pve-root

May 25 08:29:18 serv kernel: KERNEL supported cpus:

May 25 08:29:18 serv kernel: Intel GenuineIntel

May 25 08:29:18 serv kernel: AMD AuthenticAMD

May 25 08:29:18 serv kernel: Centaur CentaurHauls

May 25 08:29:18 serv kernel: x86/fpu: Supporting XSAVE feature 0x001: 'x87 floating point registers'

May 25 08:29:18 serv kernel: x86/fpu: Supporting XSAVE feature 0x002: 'SSE registers'

May 25 08:29:18 serv kernel: x86/fpu: Supporting XSAVE feature 0x004: 'AVX registers'

May 25 08:29:18 serv kernel: x86/fpu: xstate_offset[2]: 576, xstate_sizes[2]: 256

May 25 08:29:18 serv kernel: x86/fpu: Enabled xstate features 0x7, context size is 832 bytes, using 'standard

May 25 08:29:18 serv kernel: e820: BIOS-provided physical RAM map:

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x0000000000000000-0x000000000004efff] usable

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x000000000004f000-0x000000000004ffff] reserved

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x0000000000050000-0x000000000009dfff] usable

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x000000000009e000-0x000000000009ffff] reserved

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x0000000000100000-0x00000000c6924fff] usable

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000c6925000-0x00000000c692bfff] ACPI NVS

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000c692c000-0x00000000c6d71fff] usable

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000c6d72000-0x00000000c712afff] reserved

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000c712b000-0x00000000d9868fff] usable

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000d9869000-0x00000000d98f4fff] reserved

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000d98f5000-0x00000000d9914fff] ACPI data

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000d9915000-0x00000000d9a04fff] ACPI NVS

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000d9a05000-0x00000000d9f7ffff] reserved

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000d9f80000-0x00000000d9ffefff] type 20

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000d9fff000-0x00000000d9ffffff] usable

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000dc000000-0x00000000de1fffff] reserved

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000f8000000-0x00000000fbffffff] reserved

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000fec00000-0x00000000fec00fff] reserved

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000fed00000-0x00000000fed03fff] reserved

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000fed1c000-0x00000000fed1ffff] reserved

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000fee00000-0x00000000fee00fff] reserved

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000ff000000-0x00000000ffffffff] reserved

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x0000000100000000-0x000000081fdfffff] usable

May 25 08:29:18 serv kernel: NX (Execute Disable) protection: active

May 25 08:29:18 serv kernel: efi: EFI v2.31 by American Megatrends

May 25 08:29:18 serv kernel: efi: ACPI=0xd98fb000 ACPI 2.0=0xd98fb000 SMBIOS=0xd9f7e498 MPS=0xf4e90

May 25 08:29:18 serv kernel: secureboot: Secure boot could not be determined (mode 0)

May 25 08:29:18 serv kernel: SMBIOS 2.7 present.

May 25 08:29:18 serv kernel: DMI: Hewlett-Packard HP EliteDesk 800 G1 SFF/1998, BIOS L01 v02.75 05/04/2018

May 25 08:29:18 serv kernel: e820: update [mem 0x00000000-0x00000fff] usable ==> reserved

May 25 08:29:18 serv kernel: e820: remove [mem 0x000a0000-0x000fffff] usable

May 25 08:29:18 serv kernel: e820: last_pfn = 0x81fe00 max_arch_pfn = 0x400000000

May 25 08:29:18 serv kernel: MTRR default type: uncachable

May 25 08:29:18 serv kernel: MTRR fixed ranges enabled:

May 25 08:29:18 serv kernel: 00000-9FFFF write-back

Last edited: Jun 27, 2019


mira

Proxmox Staff Member

-

#4

Please post the whole output of ‘journalctl -b’ (you can use ‘journalctl -b > journal.txt’ or something like that to get the complete output in the file ‘journal.txt’).

In addition /etc/hosts and /etc/network/interfaces could be useful as well.

Also the configs of the containers (‘pct config <ctid>’) and VMs (‘qm config <vmid>’).

Any of those can contain public IP addresses you might want to mask.
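A minimal sketch of how the requested information could be collected in one go, assuming a root shell on the Proxmox host where `journalctl`, `pct` and `qm` are available. The helper names `mask_ipv4` and `collect_diagnostics` and the output file `diagnostics.txt` are hypothetical. The masking step replaces every IPv4 address with `x.x.x.x`, including private ones such as 192.168.1.10, so loosen the pattern if the local addresses are needed for troubleshooting.

```shell
#!/bin/sh
# Hypothetical helper: replace every dotted-quad IPv4 address on stdin with x.x.x.x.
mask_ipv4() {
  sed -E 's/([0-9]{1,3}\.){3}[0-9]{1,3}/x.x.x.x/g'
}

# Hypothetical helper: gather the diagnostics requested above into diagnostics.txt.
# Must run as root on the Proxmox host.
collect_diagnostics() {
  {
    echo "=== journalctl -b ==="
    journalctl -b --no-pager
    echo "=== /etc/hosts ==="
    cat /etc/hosts
    echo "=== /etc/network/interfaces ==="
    cat /etc/network/interfaces
    # Skip the header line of 'pct list' / 'qm list' and take the ID column.
    for ct in $(pct list | awk 'NR > 1 { print $1 }'); do
      echo "=== pct config $ct ==="
      pct config "$ct"
    done
    for vm in $(qm list | awk 'NR > 1 { print $1 }'); do
      echo "=== qm config $vm ==="
      qm config "$vm"
    done
  } | mask_ipv4 > diagnostics.txt
}
```

After sourcing the snippet, call `collect_diagnostics` and review `diagnostics.txt` before posting it; MAC addresses in the `net0:` lines are not masked by this pattern.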

-

#5

Please post the whole output of ‘journalctl -b’ (you can use ‘journalctl -b > journal.txt’ or something like that to get the complete output in the file ‘journal.txt’).

In addition /etc/hosts and /etc/network/interfaces could be useful as well.

Also the configs of the containers (‘pct config <ctid>’) and VMs (‘qm config <vmid>’).

Any of those can contain public IP addresses you might want to mask.

Hi! Thank you for your reply. Here's what I've found:

- journal: https://drive.google.com/file/d/1XzuR_fXvmd9ZUSetQffiOaHm8Y89TBqX/view?usp=sharing

- /etc/hosts

Code:

127.0.0.1 localhost.localdomain localhost

192.168.1.10 serv.hassio serv

# The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

ff02::3 ip6-allhosts

- /etc/network/interfaces

Code:

auto lo

iface lo inet loopback

iface eno1 inet manual

auto vmbr0

iface vmbr0 inet static

address 192.168.1.10

netmask 255.255.255.0

gateway 192.168.1.1

bridge_ports eno1

bridge_stp off

bridge_fd 0

- configs of the containers

Code:

root@serv:~# pct config 104

arch: amd64

cores: 1

description: IP 192.168.1.11%0A

hostname: UnifiConroller-production

memory: 4096

net0: name=eth0,bridge=vmbr0,hwaddr=6A:1C:03:BB:F9:24,ip=dhcp,ip6=dhcp,type=veth

onboot: 1

ostype: ubuntu

rootfs: local-lvm:vm-104-disk-0,size=22G

swap: 4096

Code:

root@serv:~# pct config 105

arch: amd64

cores: 1

description: IP 192.168.1.12/admin%0AUpdate%3A pihole -up%0A

hostname: Pi-hole

memory: 2048

net0: name=eth0,bridge=vmbr0,hwaddr=56:D1:68:FF:D4:BB,ip=dhcp,ip6=dhcp,type=veth

onboot: 1

ostype: centos

rootfs: local-lvm:vm-105-disk-0,size=8G

swap: 2048

- configs of the VMs

Code:

root@serv:~# qm config 100

bootdisk: virtio0

cores: 2

description: IP 192.168.1.14

ide0: local:iso/Windows10pro.iso,media=cdrom,size=3964800K

ide2: local:iso/virtio-win-0.1.141.iso,media=cdrom,size=309208K

memory: 8192

name: Windows-serv

net0: e1000=XX:XX:XX:XX:XX,bridge=vmbr0

numa: 0

onboot: 1

ostype: win10

scsihw: virtio-scsi-pci

smbios1: uuid=b21c0142-b809-45f4-9e04-9ba908177c55

sockets: 1

virtio0: local-lvm:vm-100-disk-0,size=80G

vmgenid: d17dc7dd-a4ba-4a2b-a101-0d81220f099b

Code:

root@serv:~# qm config 106

bios: ovmf

bootdisk: sata0

cores: 2

description: 192.168.1.5%3A8123

efidisk0: local-lvm:vm-106-disk-0,size=4M

memory: 2048

name: hassosova-2.11

net0: virtio=XX:XX:XX:XX,bridge=vmbr0

numa: 0

onboot: 1

ostype: l26

sata0: local-lvm:vm-106-disk-1,size=32G

scsihw: virtio-scsi-pci

smbios1: uuid=60959f6c-8fd2-420a-a840-8a0338ce3cb0

sockets: 1

usb0: host=3-3

usb1: host=3-4

vmgenid: 580f3096-6c7b-4a15-a38a-9b15a9fed76e

root@serv:~#

-

#6

Did you change the realm in the login dialog? If not, try the other entry and check again.

-

#7

Did you change the realm in the login dialog? If not, try the other entry and check again.

Hi.

I can choose:

- Proxmox VE authentication server

- Linux PAM standard authentication

Neither works.


mira

Proxmox Staff Member

-

#8

Just to be clear: is the login failing even though you can reach the web GUI, or is the GUI unreachable?

-

#9

Just to be clear: is the login failing even though you can reach the web GUI, or is the GUI unreachable?

It's a weird story. The web GUI was responsive, but I was unable to log in (the username and password were correct). But to the point: over the weekend I had a power cut, so my server was hard reset. After it booted, everything started to work as it should, without any problems. So I still have no idea what happened, but it's all OK now.

-

#11

@iT!T please mark the thread as SOLVED :)

OK.

Unfortunately I still don’t know what caused the problem.

-

#12

I installed 7.1 just a few days ago and I am experiencing this problem.

-

#13

I have just installed 7.1 on top of Debian by following this wiki, and I am experiencing this problem.

Rebooting doesn’t help.

Edit: Adding a new user in the pve realm works, but I don't see any reason why root@pam shouldn't work.

Last edited: Dec 1, 2021


mira

Proxmox Staff Member

-

#14

Do you specify `root@pam` as the user, or only `root`, when trying to log in to the GUI?

Does it work via SSH/directly instead of the GUI?

-

#15

Pressing CTRL+F5 in the browser can sometimes help after an upgrade, too.

-

#16

Do you specify `root@pam` as the user, or only `root`, when trying to log in to the GUI?

Does it work via SSH/directly instead of the GUI?

I specified only root.

I just tried root@pam; it still failed.

It works directly on the console. SSH login as root is disabled for security reasons.

-

#17

Pressing CTRL+F5 in the browser can sometimes help after an upgrade, too.

I have done that many times. Also, the Firefox installation I use for testing such applications is set to «Always Private (clear everything after each launch)», so I don't think it's a browser-related problem.


mira

Proxmox Staff Member

-

#18

Please provide the output of systemctl status pvedaemon, systemctl status pveproxy and your datacenter config (/etc/pve/datacenter.cfg).

Perhaps it’s a keyboard layout issue. Have you tried typing your password in the username field to see if the keyboard layout is wrong?

-

#19

Please provide the output of systemctl status pvedaemon, systemctl status pveproxy and your datacenter config (/etc/pve/datacenter.cfg).

Perhaps it’s a keyboard layout issue. Have you tried typing your password in the username field to see if the keyboard layout is wrong?

I am sure there is no issue with the keyboard layout:

1. I use passwords that consist only of lowercase letters and hyphens, since this is a test system.

2. I can log in with the newly created user «user@pve» just fine, so I'm sure that is not the issue.

3. I have tried that; no character is missing or wrong.

The output of the three commands:

Bash:

user@undrafted:~$ sudo systemctl status pvedaemon

● pvedaemon.service - PVE API Daemon

Loaded: loaded (/lib/systemd/system/pvedaemon.service; enabled; vendor preset: enabled)

Active: active (running) since Wed 2021-12-01 17:34:43 EST; 11h ago

Process: 1494 ExecStart=/usr/bin/pvedaemon start (code=exited, status=0/SUCCESS)

Main PID: 1759 (pvedaemon)

Tasks: 4 (limit: 4596)

Memory: 143.1M

CPU: 1.719s

CGroup: /system.slice/pvedaemon.service

├─1759 pvedaemon

├─1760 pvedaemon worker

├─1761 pvedaemon worker

└─1762 pvedaemon worker

Dec 01 17:34:38 undrafted systemd[1]: Starting PVE API Daemon...

Dec 01 17:34:43 undrafted pvedaemon[1759]: starting server

Dec 01 17:34:43 undrafted pvedaemon[1759]: starting 3 worker(s)

Dec 01 17:34:43 undrafted pvedaemon[1759]: worker 1760 started

Dec 01 17:34:43 undrafted pvedaemon[1759]: worker 1761 started

Dec 01 17:34:43 undrafted pvedaemon[1759]: worker 1762 started

Dec 01 17:34:43 undrafted systemd[1]: Started PVE API Daemon.

Dec 01 18:38:09 undrafted pvedaemon[1762]: <root@pam> successful auth for user 'user@pve'

Dec 02 04:37:15 undrafted IPCC.xs[1760]: pam_unix(proxmox-ve-auth:auth): authentication failure; logname= uid=0 euid=0 tty= ruser= rhost= user=root

Dec 02 04:37:17 undrafted pvedaemon[1760]: authentication failure; rhost=::ffff:192.168.157.1 user=root@pam msg=Authentication failure

user@undrafted:~$ sudo systemctl status pveproxy

● pveproxy.service - PVE API Proxy Server

Loaded: loaded (/lib/systemd/system/pveproxy.service; enabled; vendor preset: enabled)

Active: active (running) since Wed 2021-12-01 17:34:46 EST; 11h ago

Process: 1764 ExecStartPre=/usr/bin/pvecm updatecerts --silent (code=exited, status=0/SUCCESS)

Process: 1766 ExecStart=/usr/bin/pveproxy start (code=exited, status=0/SUCCESS)

Process: 9947 ExecReload=/usr/bin/pveproxy restart (code=exited, status=0/SUCCESS)

Main PID: 1768 (pveproxy)

Tasks: 4 (limit: 4596)

Memory: 145.3M

CPU: 6.153s

CGroup: /system.slice/pveproxy.service

├─1768 pveproxy

├─9981 pveproxy worker

├─9982 pveproxy worker

└─9983 pveproxy worker

Dec 02 04:31:30 undrafted pveproxy[1768]: starting 3 worker(s)

Dec 02 04:31:30 undrafted pveproxy[1768]: worker 9981 started

Dec 02 04:31:30 undrafted pveproxy[1768]: worker 9982 started

Dec 02 04:31:30 undrafted pveproxy[1768]: worker 9983 started

Dec 02 04:31:35 undrafted pveproxy[1769]: worker exit

Dec 02 04:31:35 undrafted pveproxy[1771]: worker exit

Dec 02 04:31:35 undrafted pveproxy[1770]: worker exit

Dec 02 04:31:35 undrafted pveproxy[1768]: worker 1770 finished

Dec 02 04:31:35 undrafted pveproxy[1768]: worker 1769 finished

Dec 02 04:31:35 undrafted pveproxy[1768]: worker 1771 finished

user@undrafted:~$ sudo cat /etc/pve/datacenter.cfg

cat: /etc/pve/datacenter.cfg: No such file or directory

user@undrafted:~$

Edit: Oops, sorry, I thought I should use Markdown syntax. I'll update the post to use the proper syntax for this forum.

Edit2: Updated

Last edited: Dec 2, 2021
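The `pvedaemon` log above records each rejected login together with the realm user and the client address. A small filter like the following could list just those entries; it is a sketch that assumes the message format shown above (`authentication failure; rhost=... user=...`), and the function name `auth_failures` is hypothetical. The separate `pam_unix(proxmox-ve-auth:auth)` line is left out, since its `rhost=` field is empty.

```shell
#!/bin/sh
# Hypothetical filter: read journal lines on stdin and print "<user> from <client>"
# for every failed login reported by pvedaemon.
auth_failures() {
  grep 'authentication failure; rhost=' |
    sed -E 's/.*rhost=([^ ]+) user=([^ ]+).*/\2 from \1/'
}
```

On the host it could be fed with `journalctl -b -u pvedaemon --no-pager | auth_failures`; for the log above it would print `root@pam from ::ffff:192.168.157.1`.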


mira

Proxmox Staff Member

-

#20

Could you provide your user.cfg? (cat /etc/pve/user.cfg)

When the SSL certificate has expired or is missing for some reason, problems accessing the Proxmox node can occur.

To fix the SSL problems, run this command on the Proxmox machine:

root@proxmox ~ # pvecm updatecerts --force

This command will renew the Proxmox SSL certificate and fix the problems related to it.
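Before forcing a renewal, it may be worth checking whether the node certificate has actually expired. The sketch below assumes the default self-signed node certificate at `/etc/pve/local/pve-ssl.pem` (a custom certificate, if one is installed, is not checked here), and the function names `cert_expired` and `renew_if_expired` are hypothetical. Restarting `pveproxy` afterwards makes it load the new certificate.

```shell
#!/bin/sh
# Hypothetical helper: succeed (return 0) if the PEM certificate in $1 has expired
# or cannot be read; fail (return 1) if it is still valid.
cert_expired() {
  # 'openssl x509 -checkend 0' exits 0 only if the certificate is still valid right now.
  if openssl x509 -in "$1" -noout -checkend 0 >/dev/null 2>&1; then
    return 1
  fi
  return 0
}

# Hypothetical wrapper, to be run as root on the Proxmox host.
renew_if_expired() {
  cert=/etc/pve/local/pve-ssl.pem  # assumed default node certificate path
  if cert_expired "$cert"; then
    pvecm updatecerts --force
    systemctl restart pveproxy
  fi
}
```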

-

#1

Hi! I use Proxmox on my homeserver for a while now. Currently it holds 2 VMs and 2 LXC Containers. Today i updated containers (PiHole and Unifi Controller) and also Proxmox itself. After that I couldn’t login to web panel. VMs seems work fine as well as Pi Hole. Only the Unifi Controller is unreachable after updates, same as Proxmox web panel.

My Proxmox version:

Code:

proxmox-ve: 5.4-1 (running kernel: 4.15.18-12-pve)

pve-manager: 5.4-7 (running version: 5.4-7/fc10404a)

pve-kernel-4.15: 5.4-2

pve-kernel-4.15.18-14-pve: 4.15.18-39

pve-kernel-4.15.18-12-pve: 4.15.18-36

pve-kernel-4.15.18-10-pve: 4.15.18-32

corosync: 2.4.4-pve1

criu: 2.11.1-1~bpo90

glusterfs-client: 3.8.8-1

ksm-control-daemon: 1.2-2

libjs-extjs: 6.0.1-2

libpve-access-control: 5.1-10

libpve-apiclient-perl: 2.0-5

libpve-common-perl: 5.0-52

libpve-guest-common-perl: 2.0-20

libpve-http-server-perl: 2.0-13

libpve-storage-perl: 5.0-43

libqb0: 1.0.3-1~bpo9

lvm2: 2.02.168-pve6

lxc-pve: 3.1.0-3

lxcfs: 3.0.3-pve1

novnc-pve: 1.0.0-3

proxmox-widget-toolkit: 1.0-28

pve-cluster: 5.0-37

pve-container: 2.0-39

pve-docs: 5.4-2

pve-edk2-firmware: 1.20190312-1

pve-firewall: 3.0-22

pve-firmware: 2.0-6

pve-ha-manager: 2.0-9

pve-i18n: 1.1-4

pve-libspice-server1: 0.14.1-2

pve-qemu-kvm: 3.0.1-4

pve-xtermjs: 3.12.0-1

qemu-server: 5.0-53

smartmontools: 6.5+svn4324-1

spiceterm: 3.0-5

vncterm: 1.5-3

zfsutils-linux: 0.7.13-pve1~bpo2I read about time sync might be the problem, so i added custom NTP server and reset time sync service, but nothings happened.

Whole linux environment is new for me as well as Proxmox. It would be great if some one give me few hints how to solve my problem.

![]()

mira

Proxmox Staff Member

-

#2

What’s the status of pveproxy? (systemctl status pveproxy.service)

Anything in the logs? (journalctl)

-

#3

What’s the status of pveproxy? (systemctl status pveproxy.service)

Anything in the logs? (journalctl)

Thanks for replay!

Code:

root@serv:~# systemctl status pveproxy.service

● pveproxy.service - PVE API Proxy Server

Loaded: loaded (/lib/systemd/system/pveproxy.service; enabled; vendor preset: enabled)

Active: active (running) since Sat 2019-05-25 08:29:28 CEST; 1 months 3 days ago

Main PID: 1748 (pveproxy)

Tasks: 4 (limit: 4915)

Memory: 194.2M

CPU: 3min 12.244s

CGroup: /system.slice/pveproxy.service

├─ 1748 pveproxy

├─18891 pveproxy worker

├─18892 pveproxy worker

└─18893 pveproxy worker

Jun 27 11:14:02 serv pveproxy[18893]: proxy detected vanished client connection

Jun 27 11:14:14 serv pveproxy[18892]: proxy detected vanished client connection

Jun 27 11:14:32 serv pveproxy[18892]: proxy detected vanished client connection

Jun 27 12:22:37 serv pveproxy[18891]: proxy detected vanished client connection

Jun 27 12:40:16 serv pveproxy[18892]: proxy detected vanished client connection

Jun 27 13:44:39 serv pveproxy[18892]: proxy detected vanished client connection

Jun 27 14:42:32 serv pveproxy[18892]: proxy detected vanished client connection

Jun 27 14:43:20 serv pveproxy[18893]: proxy detected vanished client connection

Jun 27 17:40:34 serv pveproxy[18892]: problem with client 192.168.1.6; Connection reset by peer

Jun 27 17:40:53 serv pveproxy[18892]: proxy detected vanished client connectionLog:

Code:

root@serv:~# journalctl

-- Logs begin at Sat 2019-05-25 08:29:18 CEST, end at Thu 2019-06-27 19:22:00 CEST. --

May 25 08:29:18 serv kernel: Linux version 4.15.18-12-pve (build@pve) (gcc version 6.3.0 20170516 (Debian 6.3

May 25 08:29:18 serv kernel: Command line: BOOT_IMAGE=/boot/vmlinuz-4.15.18-12-pve root=/dev/mapper/pve-root

May 25 08:29:18 serv kernel: KERNEL supported cpus:

May 25 08:29:18 serv kernel: Intel GenuineIntel

May 25 08:29:18 serv kernel: AMD AuthenticAMD

May 25 08:29:18 serv kernel: Centaur CentaurHauls

May 25 08:29:18 serv kernel: x86/fpu: Supporting XSAVE feature 0x001: 'x87 floating point registers'

May 25 08:29:18 serv kernel: x86/fpu: Supporting XSAVE feature 0x002: 'SSE registers'

May 25 08:29:18 serv kernel: x86/fpu: Supporting XSAVE feature 0x004: 'AVX registers'

May 25 08:29:18 serv kernel: x86/fpu: xstate_offset[2]: 576, xstate_sizes[2]: 256

May 25 08:29:18 serv kernel: x86/fpu: Enabled xstate features 0x7, context size is 832 bytes, using 'standard

May 25 08:29:18 serv kernel: e820: BIOS-provided physical RAM map:

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x0000000000000000-0x000000000004efff] usable

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x000000000004f000-0x000000000004ffff] reserved

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x0000000000050000-0x000000000009dfff] usable

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x000000000009e000-0x000000000009ffff] reserved

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x0000000000100000-0x00000000c6924fff] usable

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000c6925000-0x00000000c692bfff] ACPI NVS

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000c692c000-0x00000000c6d71fff] usable

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000c6d72000-0x00000000c712afff] reserved

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000c712b000-0x00000000d9868fff] usable

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000d9869000-0x00000000d98f4fff] reserved

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000d98f5000-0x00000000d9914fff] ACPI data

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000d9915000-0x00000000d9a04fff] ACPI NVS

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000d9a05000-0x00000000d9f7ffff] reserved

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000d9f80000-0x00000000d9ffefff] type 20

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000d9fff000-0x00000000d9ffffff] usable

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000dc000000-0x00000000de1fffff] reserved

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000f8000000-0x00000000fbffffff] reserved

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000fec00000-0x00000000fec00fff] reserved

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000fed00000-0x00000000fed03fff] reserved

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000fed1c000-0x00000000fed1ffff] reserved

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000fee00000-0x00000000fee00fff] reserved

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x00000000ff000000-0x00000000ffffffff] reserved

May 25 08:29:18 serv kernel: BIOS-e820: [mem 0x0000000100000000-0x000000081fdfffff] usable

May 25 08:29:18 serv kernel: NX (Execute Disable) protection: active

May 25 08:29:18 serv kernel: efi: EFI v2.31 by American Megatrends

May 25 08:29:18 serv kernel: efi: ACPI=0xd98fb000 ACPI 2.0=0xd98fb000 SMBIOS=0xd9f7e498 MPS=0xf4e90

May 25 08:29:18 serv kernel: secureboot: Secure boot could not be determined (mode 0)

May 25 08:29:18 serv kernel: SMBIOS 2.7 present.

May 25 08:29:18 serv kernel: DMI: Hewlett-Packard HP EliteDesk 800 G1 SFF/1998, BIOS L01 v02.75 05/04/2018

May 25 08:29:18 serv kernel: e820: update [mem 0x00000000-0x00000fff] usable ==> reserved

May 25 08:29:18 serv kernel: e820: remove [mem 0x000a0000-0x000fffff] usable

May 25 08:29:18 serv kernel: e820: last_pfn = 0x81fe00 max_arch_pfn = 0x400000000

May 25 08:29:18 serv kernel: MTRR default type: uncachable

May 25 08:29:18 serv kernel: MTRR fixed ranges enabled:

May 25 08:29:18 serv kernel: 00000-9FFFF write-back

lines 1-48Last edited: Jun 27, 2019

![]()

mira

Proxmox Staff Member

-

#4

Please post the whole output of ‘journalctl -b’ (you can use ‘journalctl -b > journal.txt’ or something like that to get the complete output in the file ‘journal.txt’).

In addition /etc/hosts and /etc/network/interfaces could be useful as well.

Also the configs of the containers (‘pct config <ctid>’) and VMs (‘qm config <vmid>’).

Any of those can contain public IP addresses you might want to mask.

-

#5

Please post the whole output of ‘journalctl -b’ (you can use ‘journalctl -b > journal.txt’ or something like that to get the complete output in the file ‘journal.txt’).

In addition /etc/hosts and /etc/network/interfaces could be useful as well.

Also the configs of the containers (‘pct config <ctid>’) and VMs (‘qm config <vmid>’).

Any of those can contain public IP addresses you might want to mask.

Hi! Thank you for your replay. Here’s what I’ve found:

— journal: https://drive.google.com/file/d/1XzuR_fXvmd9ZUSetQffiOaHm8Y89TBqX/view?usp=sharing

— /etc/hosts

Code:

127.0.0.1 localhost.localdomain localhost

192.168.1.10 serv.hassio serv

# The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

ff02::3 ip6-allhosts— /etc/network/interfaces

Code:

auto lo

iface lo inet loopback

iface eno1 inet manual

auto vmbr0

iface vmbr0 inet static

address 192.168.1.10

netmask 255.255.255.0

gateway 192.168.1.1

bridge_ports eno1

bridge_stp off

bridge_fd 0— configs of the containers

Code:

root@serv:~# pct config 104

arch: amd64

cores: 1

description: IP 192.168.1.11%0A

hostname: UnifiConroller-production

memory: 4096

net0: name=eth0,bridge=vmbr0,hwaddr=6A:1C:03:BB:F9:24,ip=dhcp,ip6=dhcp,type=veth

onboot: 1

ostype: ubuntu

rootfs: local-lvm:vm-104-disk-0,size=22G

swap: 4096Code:

root@serv:~# pct config 105

arch: amd64

cores: 1

description: IP 192.168.1.12/admin%0AUpdate%3A pihole -up%0A

hostname: Pi-hole

memory: 2048

net0: name=eth0,bridge=vmbr0,hwaddr=56:D1:68:FF:D4:BB,ip=dhcp,ip6=dhcp,type=veth

onboot: 1

ostype: centos

rootfs: local-lvm:vm-105-disk-0,size=8G

swap: 2048— configs of the VMs

Code:

root@serv:~# qm config 100

bootdisk: virtio0

cores: 2

description: IP 192.168.1.14

ide0: local:iso/Windows10pro.iso,media=cdrom,size=3964800K

ide2: local:iso/virtio-win-0.1.141.iso,media=cdrom,size=309208K

memory: 8192

name: Windows-serv

net0: e1000=XX:XX:XX:XX:XX,bridge=vmbr0

numa: 0

onboot: 1

ostype: win10

scsihw: virtio-scsi-pci

smbios1: uuid=b21c0142-b809-45f4-9e04-9ba908177c55

sockets: 1

virtio0: local-lvm:vm-100-disk-0,size=80G

vmgenid: d17dc7dd-a4ba-4a2b-a101-0d81220f099bCode:

root@serv:~# qm config 106

bios: ovmf

bootdisk: sata0

cores: 2

description: 192.168.1.5%3A8123

efidisk0: local-lvm:vm-106-disk-0,size=4M

memory: 2048

name: hassosova-2.11

net0: virtio=XX:XX:XX:XX,bridge=vmbr0

numa: 0

onboot: 1

ostype: l26

sata0: local-lvm:vm-106-disk-1,size=32G

scsihw: virtio-scsi-pci

smbios1: uuid=60959f6c-8fd2-420a-a840-8a0338ce3cb0

sockets: 1

usb0: host=3-3

usb1: host=3-4

vmgenid: 580f3096-6c7b-4a15-a38a-9b15a9fed76e

root@serv:~#-

#6

Did you changed the realm on the login mask? If not, then try to use the other entry and check it again.

-

#7

Did you changed the realm on the login mask? If not, then try to use the other entry and check it again.

Hi.

I can choose:

— Proxmox VE authentication server

— Linux PAM standard authentication

both doesn’t work.

![]()

mira

Proxmox Staff Member

-

#8

Just to be clear, is the login not working but you can reach the Web GUI? Or is it unreachable?

-

#9

Just to be clear, is the login not working but you can reach the Web GUI? Or is it unreachable?

It’s weird story. The web gui was responsive. I was unnable to login. (login and pass was correct). But to the point — during weekend I had a power cut, so it was hard reset for my server. After boot everything started to work as it should, without any problem. So, still, I have no idea what has happened, but it’s all ok now.

-

#11

@iT!T please, mark as SOLVED

OK.

Unfortunately I still don’t know what caused the problem.

-

#12

I just Installed 7.1 a few days ago and I am experiencing this problem

-

#13

I just installed 7.1 right now on top of Debian by following this wiki and I am experiencing this problem.

Rebooting doesn’t help.

Edit: Adding a new user in pve realm works, but I don’t think there should be a reason root@pam doesn’t work.

Last edited: Dec 1, 2021

![]()

mira

Proxmox Staff Member

-

#14

Do you specify `root@pam` as user, or only `root` when trying to login in the GUI?

Does it work via SSH/directly instead of the GUI?

-

#15

A CTRL+F5 in the browser might help sometimes too after an upgrade.

-

#16

Do you specify `root@pam` as user, or only `root` when trying to login in the GUI?

Does it work via SSH/directly instead of the GUI?

I specified only root.

I just tried root@pam, still failed.

It works directly in the console. SSH using root is not enabled for security reasons.

-

#17

A CTRL+F5 in the browser might help sometimes too after an upgrade.

I have done that many times, also my Firefox installation for testing such applications is set to «Always Private (clear everything after each launch)», so I don’t think it’s a browser related problem.

![]()

mira

Proxmox Staff Member

-

#18

Please provide the output of systemctl status pvedaemon, systemctl status pveproxy and your datacenter config (/etc/pve/datacenter.cfg).

Perhaps it’s a keyboard layout issue. Have you tried typing your password in the username field to see if the keyboard layout is wrong?

-

#19

Please provide the output of

systemctl status pvedaemon,systemctl status pveproxyand your datacenter config (/etc/pve/datacenter.cfg).

Perhaps it’s a keyboard layout issue. Have you tried typing your password in the username field to see if the keyboard layout is wrong?

I am sure there is no issue with keyboard layout.

1. I use passwords that only consist lowercase letters and hyphens since this is a test system.

2. I am able to login with the newly manually created user «user@pve» just fine, so I’m sure that is not the issue.

3. I have tried doing that, no character is missed or wrong.

The output of the three commands

Bash:

```bash
user@undrafted:~$ sudo systemctl status pvedaemon
● pvedaemon.service - PVE API Daemon
Loaded: loaded (/lib/systemd/system/pvedaemon.service; enabled; vendor preset: enabled)
Active: active (running) since Wed 2021-12-01 17:34:43 EST; 11h ago
Process: 1494 ExecStart=/usr/bin/pvedaemon start (code=exited, status=0/SUCCESS)
Main PID: 1759 (pvedaemon)
Tasks: 4 (limit: 4596)
Memory: 143.1M
CPU: 1.719s
CGroup: /system.slice/pvedaemon.service
├─1759 pvedaemon
├─1760 pvedaemon worker
├─1761 pvedaemon worker
└─1762 pvedaemon worker

Dec 01 17:34:38 undrafted systemd[1]: Starting PVE API Daemon...
Dec 01 17:34:43 undrafted pvedaemon[1759]: starting server
Dec 01 17:34:43 undrafted pvedaemon[1759]: starting 3 worker(s)
Dec 01 17:34:43 undrafted pvedaemon[1759]: worker 1760 started
Dec 01 17:34:43 undrafted pvedaemon[1759]: worker 1761 started
Dec 01 17:34:43 undrafted pvedaemon[1759]: worker 1762 started
Dec 01 17:34:43 undrafted systemd[1]: Started PVE API Daemon.
Dec 01 18:38:09 undrafted pvedaemon[1762]: <root@pam> successful auth for user 'user@pve'
Dec 02 04:37:15 undrafted IPCC.xs[1760]: pam_unix(proxmox-ve-auth:auth): authentication failure; logname= uid=0 euid=0 tty= ruser= rhost= user=root
Dec 02 04:37:17 undrafted pvedaemon[1760]: authentication failure; rhost=::ffff:192.168.157.1 user=root@pam msg=Authentication failure

user@undrafted:~$ sudo systemctl status pveproxy
● pveproxy.service - PVE API Proxy Server
Loaded: loaded (/lib/systemd/system/pveproxy.service; enabled; vendor preset: enabled)
Active: active (running) since Wed 2021-12-01 17:34:46 EST; 11h ago
Process: 1764 ExecStartPre=/usr/bin/pvecm updatecerts --silent (code=exited, status=0/SUCCESS)
Process: 1766 ExecStart=/usr/bin/pveproxy start (code=exited, status=0/SUCCESS)
Process: 9947 ExecReload=/usr/bin/pveproxy restart (code=exited, status=0/SUCCESS)
Main PID: 1768 (pveproxy)
Tasks: 4 (limit: 4596)
Memory: 145.3M
CPU: 6.153s
CGroup: /system.slice/pveproxy.service
├─1768 pveproxy
├─9981 pveproxy worker
├─9982 pveproxy worker
└─9983 pveproxy worker

Dec 02 04:31:30 undrafted pveproxy[1768]: starting 3 worker(s)
Dec 02 04:31:30 undrafted pveproxy[1768]: worker 9981 started
Dec 02 04:31:30 undrafted pveproxy[1768]: worker 9982 started
Dec 02 04:31:30 undrafted pveproxy[1768]: worker 9983 started
Dec 02 04:31:35 undrafted pveproxy[1769]: worker exit
Dec 02 04:31:35 undrafted pveproxy[1771]: worker exit
Dec 02 04:31:35 undrafted pveproxy[1770]: worker exit
Dec 02 04:31:35 undrafted pveproxy[1768]: worker 1770 finished
Dec 02 04:31:35 undrafted pveproxy[1768]: worker 1769 finished
Dec 02 04:31:35 undrafted pveproxy[1768]: worker 1771 finished

user@undrafted:~$ sudo cat /etc/pve/datacenter.cfg
cat: /etc/pve/datacenter.cfg: No such file or directory
user@undrafted:~$
```

Edit: Oops, sorry, I thought I should use markdown syntax. Will update to use the proper syntax for this forum.

Edit2: Updated

Last edited: Dec 2, 2021

mira

Proxmox Staff Member

-

#20

Could you provide your user.cfg? (cat /etc/pve/user.cfg)
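As an aid for reading that file, here is a minimal sketch that summarizes the user entries. It assumes user lines in /etc/pve/user.cfg follow the colon-separated `user:<userid>:<enable>:<expire>:...` layout (field 3 is the enable flag, field 4 the expiry as a Unix timestamp, with 0 meaning "never"); a disabled or expired account could also explain a failed login. `list_pve_users` is a hypothetical helper, not a Proxmox command.

```bash
#!/usr/bin/env bash
# Minimal sketch: summarize "user:" entries of a Proxmox VE user.cfg.
# Assumed layout: user:<userid>:<enable>:<expire>:<firstname>:<lastname>:<email>:...
# list_pve_users is a hypothetical helper, not part of Proxmox VE.
list_pve_users() {
    local cfg="${1:-/etc/pve/user.cfg}"
    awk -F: -v now="$(date +%s)" '
        $1 == "user" {
            # Field 3: 1 = enabled, anything else = disabled.
            state = ($3 == "1") ? "enabled" : "DISABLED"
            # Field 4: expiry as Unix time; empty or 0 means it never expires.
            if ($4 != "" && $4 != "0" && $4 + 0 < now + 0) state = state ",EXPIRED"
            print $2, state
        }' "$cfg"
}

# Usage on the Proxmox host:
#   list_pve_users
```

Lines other than `user:` entries (groups, ACLs, and so on) are ignored.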

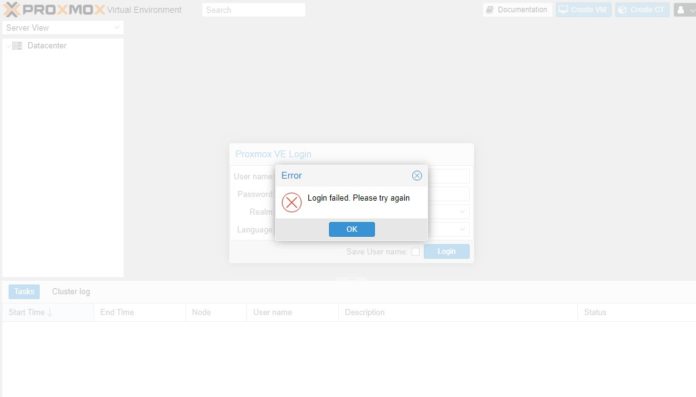

Today we have a quick fix to a Proxmox VE cluster login issue. When you try logging into a Proxmox VE node, it can give you a “Login failed. Please try again” message even if you are using the correct credentials. This can happen when a cluster’s health is less than 100%, so we wanted to show what causes it and the quick fix to let you log in.

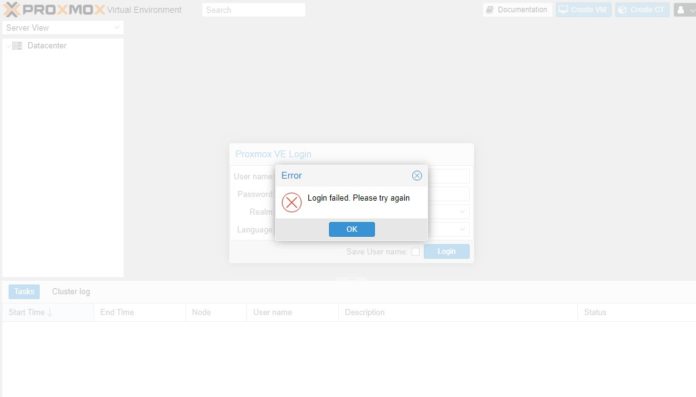

Here is the “Login failed. Please try again” error screen that you see on a node that is running but belongs to a failed cluster. This error appeared even when supplying the correct username, password, and realm. VMs on the node were also operating normally.

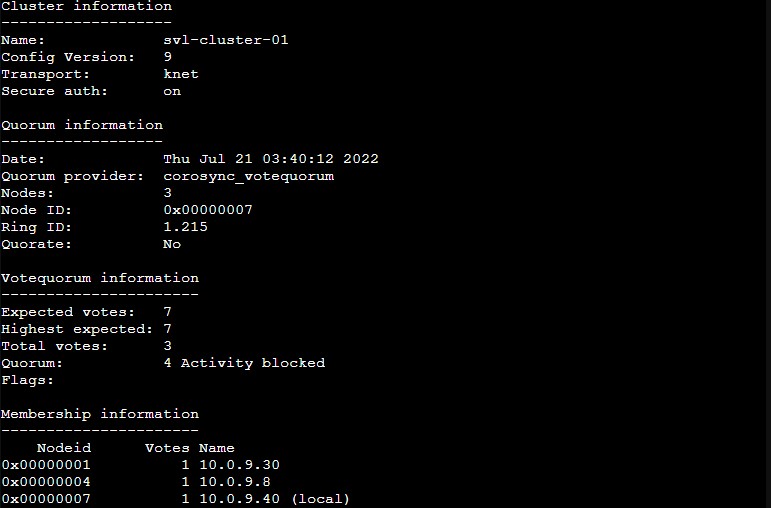

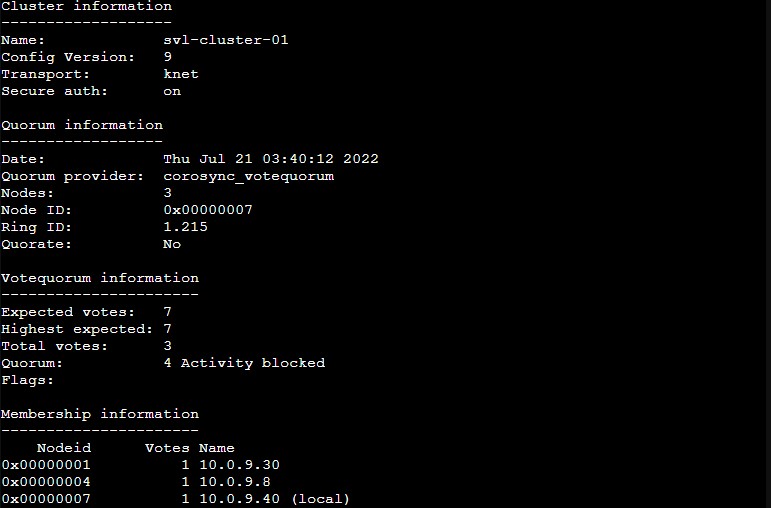

The reason for this is that the cluster is in a degraded state. We created this state while decommissioning a 7-node PVE cluster. With only 3 of 7 nodes online, we did not have quorum, so even local node authentication was failing.

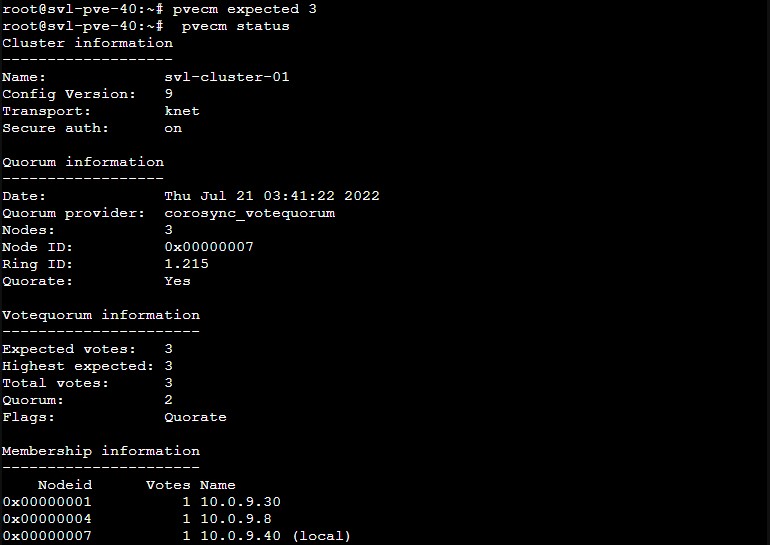

If you search online, you will find “pvecm expected 1” as the fix, which fools the Proxmox VE cluster into thinking it has quorum. This typically works on 3-node clusters, but it stops working as the cluster size increases.

Here is the cluster view: it is a 7-node cluster, so a majority of nodes (a quorum) requires four nodes. As we were taking the nodes down, four were already offline but had not been removed from the cluster. That left only 3 of 7 nodes online, which is not enough for a quorum.

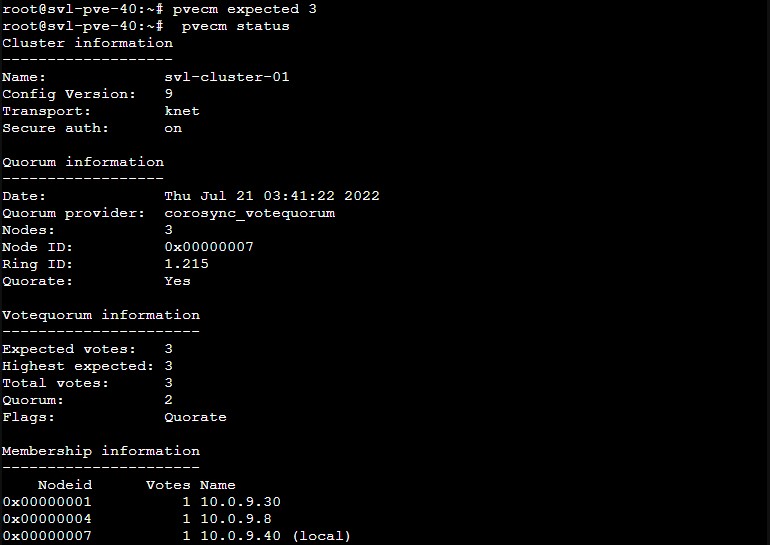

Instead of pvecm expected 1, we can use “pvecm expected 3” to match the three nodes online here, which gives us quorum again. The expected votes and highest expected are then 3, and the quorum is two. With three nodes online and an expected value of three, the cluster is quorate.
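The arithmetic above is plain majority voting. A minimal sketch, assuming one vote per node (the default): quorum is floor(expected_votes / 2) + 1. The helper names `quorum_for` and `is_quorate` are hypothetical and only illustrate the calculation; they are not Proxmox commands.

```bash
#!/usr/bin/env bash
# Minimal sketch of majority quorum, assuming every node has exactly one vote.
# quorum_for and is_quorate are hypothetical helpers, not Proxmox commands.
quorum_for() {
    local expected_votes=$1
    # Bash integer division truncates, which is floor for positive numbers.
    echo $(( expected_votes / 2 + 1 ))
}

# is_quorate ONLINE EXPECTED: succeeds when the online votes reach quorum.
is_quorate() {
    local online=$1 expected=$2
    (( online >= $(quorum_for "$expected") ))
}

# Scenario from the text, 3 of 7 nodes online:
#   quorum_for 7  -> 4, so 3 online nodes are not quorate
#   after "pvecm expected 3": quorum_for 3 -> 2, so 3 online nodes are quorate
```

The same rule explains why a two-node cluster cannot survive losing a node on its own: `quorum_for 2` is 2.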

Once this is done, we are able to login to Proxmox VE normally.

Final Words

This is not going to be the most common issue, but it is one where the current online guides lead you only to the small-cluster answer rather than one that works for larger clusters. Ideally, our readers only run into this when taking down a cluster. Still, we wanted to document the fix for those who run into it in the future. It can be a strange feeling to get a login failure even when using the correct username and password. Hopefully this helps.

The task:

Two nodes run in a cluster in «virtual machine replication» mode. The virtual machines run on the first node; on a schedule, all changes are replicated to the stopped copies of those VMs on the second node. If the first node died, the plan was to connect to the second node and start the VMs there.

Likewise, if the second node died, everything would keep running on the first.

What I did:

I installed Proxmox Virtual Environment 7.2-4 on two computers and joined them into a cluster in «virtual machine replication» mode, following https://www.dmosk.ru/miniinstruktions.php?mini=proxmoxve-cluster#delete-node

I created a ZFS pool, restored a VM from a backup onto the first node, and started replication; from the change in used disk space I could see that replication really had happened.

So everything works while both nodes are up.

I log out of the web admin panel and simulate the “death” of one node, i.e. I unplug its network cable.

That’s it: I can no longer get into the remaining node; it says “Login failed, please try again”.

At the same time, SSH connects just fine.

When I plug the network cable back in, the web UI lets me in immediately.

HELP!!!

Is this functionality supported by a cluster in «virtual machine replication» mode?

If it is, what is the problem, and what am I doing wrong?

If it isn’t, what is the point of such replication?
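This symptom matches the quorum loss described in the cluster article above: in a two-node cluster the quorum for 2 expected votes is 2, so the node left alone after the cable is pulled is no longer quorate, and the cluster file system under /etc/pve becomes read-only. The sketch below assumes `pvecm status` prints a `Quorate:` line (as its votequorum section normally does) and only suggests `pvecm expected 1` when the node reports it is not quorate. `node_is_quorate` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Minimal sketch: read "pvecm status" output on stdin and succeed only if it
# contains a line like "Quorate:          Yes". Hypothetical helper name.
node_is_quorate() {
    awk -F: '
        $1 == "Quorate" {
            gsub(/[[:space:]]/, "", $2)   # strip the alignment padding
            found = 1
            ok = ($2 == "Yes")
        }
        END { exit !(found && ok) }'
}

# Usage on the surviving node of a two-node cluster (as root):
#   if ! pvecm status | node_is_quorate; then
#       # Temporarily lower the expected votes so this node regains quorum.
#       # Only do this when you are sure the other node is really down,
#       # otherwise both nodes could end up writing to the same VMs.
#       pvecm expected 1
#   fi
```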

The error ‘Login to Proxmox host failed’ can happen in different scenarios:

- When you try to access the Proxmox VE management console directly

- While integrating a third-party module such as WHMCS with your Proxmox server

- During the management of a cluster of Proxmox nodes

Proxmox would just say “Login failed, please try again” and you may have no idea what went wrong.

Below are the four main reasons we come across the ‘Login to Proxmox host failed’ error in our Outsourced Web Hosting Support services, and how we fix each of them.

1. Login failed due to SSL problems

The default URL to access the Proxmox VE management console is https://IPaddress:8006/. If you try to access it over plain HTTP instead of HTTPS, the console will not load.

At times, when the SSL certificate has expired, there may be issues accessing the Proxmox node. In some cases, a bug in Proxmox causes it not to detect the SSL settings.

To fix SSL issues, first confirm that the certificate has not expired and is working fine. If it is, run this command on the Proxmox machine:

pvecm updatecerts --force

This command will update and fix the issues related to Proxmox SSL certificate and you’d be able to access the node fine.
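The expiry check mentioned above can be done with `openssl`. A minimal sketch, assuming the node certificate served by pveproxy is /etc/pve/local/pve-ssl.pem (a custom certificate, if installed, may live elsewhere); `cert_still_valid` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Minimal sketch: print a certificate's expiry date and succeed only if it is
# still valid for at least SECONDS more (default 0, i.e. "not yet expired").
# The default path is an assumption about where the node certificate lives.
cert_still_valid() {
    local cert="${1:-/etc/pve/local/pve-ssl.pem}"
    local seconds="${2:-0}"
    openssl x509 -in "$cert" -noout -enddate           # prints notAfter=...
    openssl x509 -in "$cert" -noout -checkend "$seconds" >/dev/null
}

# Usage on the Proxmox host:
#   cert_still_valid || pvecm updatecerts --force
```

`-checkend` sets the exit status, so the helper composes directly with `||` as in the usage line.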

2. Firewall rules causing login failures

Firewall rules in the Proxmox node can cause login failures. While firewalls are important to secure a server, configuring the rules correctly is vital for proper server functioning.

Proxmox VE 4.x and later uses the following ports:

- Web interface at port 8006

- pvedaemon (listens only on 127.0.0.1) at port 85

- SPICE proxy at port 3128

- sshd at port 22

- rpcbind at port 111

Proxmox server – ports to be allowed in firewall

On Proxmox servers that use a firewall such as iptables, explicitly allow these ports so that the server functions properly.

To allow the Proxmox ports, add firewall rules on the Proxmox server for each of them:

Allow connections to ports in Proxmox node

For proper internal communication within the Proxmox server, also add a rule accepting connections on the loopback interface; pvedaemon, for example, listens only on 127.0.0.1.

Allow connections to Proxmox host loopback interface
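The rules described above might look like the following. This is a minimal sketch, not the article's exact rule set: it assumes an iptables INPUT chain whose default policy is set to DROP elsewhere, and uses the ports listed in this section. The `IPT` variable and `allow_proxmox_ports` function are hypothetical; setting `IPT=echo` prints the commands instead of running them.

```bash
#!/usr/bin/env bash
# Minimal sketch: accept loopback traffic plus the Proxmox VE ports listed above.
# Assumes the INPUT policy is set to DROP elsewhere. IPT=echo gives a dry run.
IPT="${IPT:-iptables}"

allow_proxmox_ports() {
    # Loopback: pvedaemon listens only on 127.0.0.1:85, so local traffic must pass.
    $IPT -A INPUT -i lo -j ACCEPT
    $IPT -A OUTPUT -o lo -j ACCEPT
    # Let replies to already-established connections back in.
    $IPT -A INPUT -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT
    local port
    # 8006 = web interface, 3128 = SPICE proxy, 22 = sshd, 111 = rpcbind
    for port in 8006 3128 22 111; do
        $IPT -A INPUT -p tcp --dport "$port" -j ACCEPT
    done
    # rpcbind also answers on UDP.
    $IPT -A INPUT -p udp --dport 111 -j ACCEPT
}

# Usage (as root): allow_proxmox_ports && iptables -P INPUT DROP
```

Adding the ACCEPT rules before switching the policy to DROP avoids locking yourself out of an SSH session halfway through.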

In the case of third-party modules such as WHMCS, ModulesGarden, etc., it is important to ensure proper connectivity between the two servers.

Use the telnet command to check connectivity from the module server to the Proxmox node. This helps determine whether the login failure is caused by a connectivity problem.

Flushing the firewall rules entirely may resolve connectivity problems, but it’s not advisable for server security. It is important to allow the required connections while denying everything else.
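Where telnet is not installed, a similar check can be scripted. This sketch assumes bash (for its `/dev/tcp` redirection) and the coreutils `timeout` command on the module server; `port_open` and the hostname in the usage line are hypothetical.

```bash
#!/usr/bin/env bash
# Minimal sketch: succeed if a TCP connection to HOST:PORT opens within SECS.
# Uses bash's /dev/tcp pseudo-device instead of telnet. Hypothetical helper.
port_open() {
    local host=$1 port=${2:-8006} secs=${3:-3}
    # Inside bash -c, $0 is the host and $1 is the port.
    timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}

# Usage on the module server:
#   port_open proxmox.example.com 8006 && echo "reachable" || echo "blocked or down"
```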

3. Login errors due to incorrect server time

Most servers rely on an NTP service to keep their clocks accurate. At times, NTP can fail to sync the server time due to connectivity issues or other service-related errors.

It can also happen that the server’s time zone gets changed, especially when the Proxmox installation is migrated to a different machine.

In such cases, the lack of clock synchronization leaves the Proxmox installation with an incorrect server time, which can lead to login failures.

Proper clock synchronization is crucial for the smooth functioning of a Proxmox server. Fixing NTP and keeping the server time accurate helps resolve these login errors.
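To check whether the clock is actually synchronized, `timedatectl status` is a convenient source. A minimal sketch, assuming its output contains either “System clock synchronized: yes” (newer systemd) or “NTP synchronized: yes” (older releases such as those under Proxmox VE 5); `clock_synced` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Minimal sketch: read "timedatectl status" output on stdin and succeed only if
# systemd reports the clock as NTP-synchronized (old or new wording).
clock_synced() {
    grep -Eq '^[[:space:]]*(System clock|NTP) synchronized:[[:space:]]*yes'
}

# Usage on the Proxmox host:
#   timedatectl status | clock_synced || echo "clock not synchronized; check the NTP service"
```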

4. Failed to login due to password issues

To access the Proxmox VE management console, use the same username and password as for the Proxmox shell, as defined during the Proxmox installation.

While strong passwords are advisable for security, we have occasionally seen overly complicated passwords with many special characters cause login issues.

Such password issues can be due to a bug in the Proxmox authentication module that validates the login details.

So if none of the fixes above work, it’s worth resetting the node passwords to simpler ones and trying to log in again.

In short

Here we saw the four main reasons why logging in to a Proxmox node fails and how to fix them. Apply each fix with caution to avoid breaking anything.

‘Login to Proxmox host failed’ errors can also happen for various other reasons, ranging from browser cookies to issues with storage devices.

Bobcares provides Outsourced Hosting Support for online businesses. Our services include Outsourced Web Hosting Support, Outsourced Server Support, Outsourced Help Desk Support, Outsource Live Chat Support and Phone Support Services.

(@goprog)

Active Member

Joined: 4 years ago

Good afternoon, everyone.

The web interface won’t open; I just get a blank white page.

proxmox-ve: 5.2-2 (running kernel: 4.15.17-1-pve)

pve-manager: 5.2-1 (running version: 5.2-1/0fcd7879)

Clearing the browser cache and trying other browsers gives the same result.

systemctl restart pveproxy: no effect

# netstat -an | grep 8006

tcp 0 0 0.0.0.0:8006 0.0.0.0:* LISTEN

SSH works, the virtual machines are running, and the mobile version of the interface opens.

(@stalker_slx)

Estimable Member

Joined: 4 years ago

@goprog

First, try entering your Proxmox server’s IP address in the browser instead of its DNS name, so that it looks like this (replace 192.168.10.152 with your own IP address):

https://192.168.10.152:8006

(@stalker_slx)

Estimable Member

Joined: 4 years ago

@goprog

If you haven’t installed a certificate on your Proxmox server (either a purchased one or a self-issued one that has been added to your computer’s trusted root certificates), the «Opera» browser will show the message: «Your connection is not private. It could not be confirmed that this is the server. Your computer’s operating system does not trust its security certificate. The server may be misconfigured, or someone may be trying to intercept your data. NET::ERR_CERT_AUTHORITY_INVALID».

You will then see two buttons, «Don’t proceed» and «Help me understand»; click the second one, and then «Proceed to 192.168.10.152 (unsafe)».

After that you will be greeted by the familiar Proxmox web interface, where you enter your credentials (username and password).

If you use a different browser, the wording of the message above may differ, but the meaning and the steps will be the same.

(@stalker_slx)

Estimable Member

Joined: 4 years ago

@goprog

It looks like some system files on your Proxmox server are damaged. Try updating the system from its console (over SSH):

apt update && apt upgrade

Then fix any broken package dependencies:

apt install -f

(@stalker_slx)

Estimable Member

Joined: 4 years ago

If the problem persists, run these three commands in the terminal, in order:

apt dist-upgrade

pvecm updatecerts --force

service pveproxy restart

Login failed in Proxmox even though SSH access works

After certificates are updated, you may find that you can no longer log in to Proxmox through the UI: it fails with a “Login failed” error.

How to fix it:

- Reinstall the certificates.

- Check that the file /etc/pve/pve-www.key exists. If it is missing, it must be restored: it is the server’s private key.
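The file check above can be scripted. A minimal sketch, assuming the default locations: /etc/pve/pve-www.key from the note, plus /etc/pve/local/pve-ssl.key and /etc/pve/local/pve-ssl.pem as the node’s SSL key and certificate. `check_pve_key_files` and the `PVE_DIR` override are hypothetical names added so the check can be tried on a copy.

```bash
#!/usr/bin/env bash
# Minimal sketch: report whether the key/certificate files exist and are
# non-empty; returns non-zero if any is missing. PVE_DIR is a hypothetical
# override of the /etc/pve base directory.
check_pve_key_files() {
    local base="${PVE_DIR:-/etc/pve}" missing=0 f
    for f in pve-www.key local/pve-ssl.key local/pve-ssl.pem; do
        if [[ -s "$base/$f" ]]; then
            echo "ok       $base/$f"
        else
            echo "MISSING  $base/$f"
            missing=1
        fi
    done
    return "$missing"
}

# Usage on the Proxmox host:
#   check_pve_key_files || echo "restore the missing files before retrying the login"
```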

I’ve just got a powerful dedicated server, and I’d like to virtualize it. The idea is to install Proxmox VE on the host and then create a VM for each use: one for my website, one for my Git repo, and so on.

I just began fiddling around with iptables, and I have to admit I’m having a bad time. I wrote a small script:

```bash
#!/bin/bash

# Flush all existing rules
iptables -F
iptables -t nat -F
iptables -t mangle -F

# Remove user-defined chains
iptables -X
iptables -t nat -X
iptables -t mangle -X

# Allow ESTABLISHED and RELATED traffic (accept replies)
iptables -A INPUT -m state --state ESTABLISHED,RELATED -j ACCEPT
iptables -A OUTPUT -m state --state ESTABLISHED,RELATED -j ACCEPT

# Allow ping
iptables -A OUTPUT -p icmp -m state --state NEW,ESTABLISHED,RELATED -j ACCEPT
iptables -A INPUT -p icmp -m state --state NEW,ESTABLISHED,RELATED -j ACCEPT
iptables -A INPUT -p icmp -m limit --limit 5/s -j ACCEPT

# Allow remote access through SSH (and HTTP)
iptables -A INPUT -p TCP --dport ssh -j ACCEPT
iptables -A INPUT -p TCP --dport http -j ACCEPT

# Accept DNS
iptables -A OUTPUT -p udp --dport 53 -j ACCEPT
iptables -A OUTPUT -p tcp --dport 53 -j ACCEPT

# Incoming web traffic (HTTP & HTTPS)
iptables -I INPUT -p tcp --dport 80 -j ACCEPT
iptables -I INPUT -p tcp --dport 443 -j ACCEPT

# Open ports for Proxmox input
iptables -I INPUT -p tcp --dport 8006 -j ACCEPT
iptables -I INPUT -p tcp --dport 5900 -j ACCEPT
iptables -I INPUT -p tcp --dport 5999 -j ACCEPT
iptables -I INPUT -p tcp --dport 3128 -j ACCEPT

# Default to DROP
iptables -P INPUT DROP
iptables -P OUTPUT DROP
iptables -P FORWARD DROP
```

There is no traffic forwarding yet. My first aim is to open all the ports Proxmox needs to work correctly in a secure environment.

Once that script has run, I can display Proxmox’s web UI (port 8006), but I cannot log in. Proxmox says «Login failed, please try again». When all the rules are flushed, everything works fine.

Can someone help?
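A likely explanation, offered with some caution: the script never accepts loopback traffic, and its OUTPUT policy is DROP. The web UI on port 8006 still loads, but as far as we understand, pveproxy hands the login itself to pvedaemon, which listens only on 127.0.0.1:85 (see the port list earlier), and that local connection gets dropped. The sketch below checks `iptables-save` output for this pattern; `audit_loopback_rules` is a hypothetical helper, and the usage comment shows the two rules one would add before the default-DROP policies.

```bash
#!/usr/bin/env bash
# Minimal sketch: read "iptables-save" output on stdin and flag a default-DROP
# chain that has no loopback ACCEPT rule. Prints one line per problem and
# exits non-zero if any problem is found. Hypothetical helper name.
audit_loopback_rules() {
    awk '
        /^:INPUT DROP/                     { in_drop = 1 }
        /^:OUTPUT DROP/                    { out_drop = 1 }
        /^-A INPUT -i lo( .*)? -j ACCEPT/  { in_lo = 1 }
        /^-A OUTPUT -o lo( .*)? -j ACCEPT/ { out_lo = 1 }
        END {
            bad = 0
            if (in_drop && !in_lo)   { print "INPUT policy is DROP but no \"-i lo -j ACCEPT\" rule"; bad = 1 }
            if (out_drop && !out_lo) { print "OUTPUT policy is DROP but no \"-o lo -j ACCEPT\" rule"; bad = 1 }
            exit bad
        }'
}

# Usage (as root):
#   iptables-save | audit_loopback_rules
# Likely fix: add these lines before the "iptables -P ... DROP" lines of the script:
#   iptables -A INPUT -i lo -j ACCEPT
#   iptables -A OUTPUT -o lo -j ACCEPT
```

Note that with OUTPUT set to DROP, other outgoing traffic the host needs (for example NTP or package updates) is also blocked unless explicitly allowed.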